Economy: Chinese startup DeepSeek hammers US stocks with cheaper open-source AI model

- Thread starter Sweater of AV

- Start date

- Joined: Jun 13, 2014
- Messages: 14,150
- Reaction score: 12,348

> way too early to be drawing any conclusions on this, but historically, China isn't known for its innovation.

Paper, gunpowder, printing, and synthetic insulin say hi.

Aren't those the cars that are known for spontaneously blowing up?

You mean like Teslas? Because those spontaneously blow up too.

- Joined: Oct 14, 2007
- Messages: 6,511
- Reaction score: 2,474

Oh, look at the shook Americans.

The same thing happened when Japan overtook the USA in tech in the '70s.

Naturally, Americans couldn't handle it, so they curbed Japanese exports to the point where Japan was no longer competitive.

But China is too big for that shit.

While the US has been bickering about trans people in bathrooms, the Chinese have been going full steam ahead with investments in the tech sector.

Chinese cities already look like something out of Blade Runner, while American cities are either burning, filled with homeless people, or stuck with unusable public transport.


- Joined: Mar 7, 2022
- Messages: 2,288
- Reaction score: 3,862

Maybe, but it's also innovative.

A deep dive into DeepSeek's newest chain of thought model

El Reg digs its claws into Middle Kingdom's latest chain of thought model (www.theregister.com)

According to these guys, it offers improved performance and a much lower cost. I wouldn't use Chinese tech like that anyway, but if your only goal is cheap and fast, it's apparently a good solution.

Isn't that the goal of most American and multinational corporations anyway? Cheap and fast. Is it only a problem because it's Chinese? My question is: if the government keeps giving concessions and subsidies to all these American corporations and their heads (e.g. Musk and Zuckerberg), and American companies keep getting their shit pushed in by foreign entities, shouldn't we re-evaluate those concessions?

China is beating America on EV production, and all America has is the terrible-looking Cybertruck and unattractive alternatives such as Rivian. And it's not as if America has learned its lesson. For example, a lot of people use Microsoft products, but no matter how much money Microsoft gets, it still lags behind Linux in certain respects.

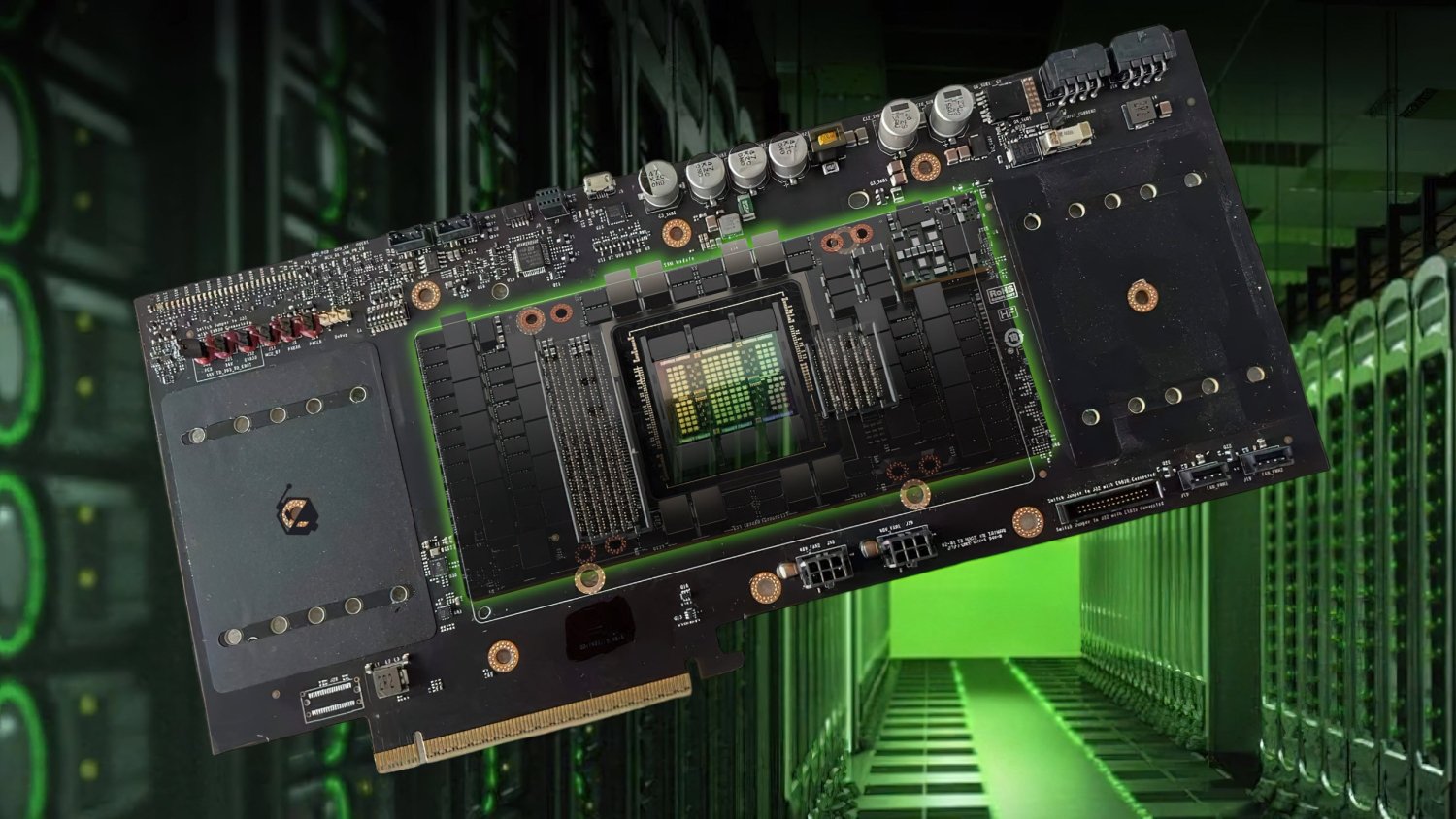

I've heard they're doing it with Nvidia chips they aren't actually supposed to have. Is that true?

Almost every company that trains models this large does so with Nvidia hardware. That's why Nvidia has a $3 trillion market cap.

There are certain GPUs Nvidia can no longer export to China, namely the H100. Nvidia has created several weaker GPUs to work within the export ban and sell legally to China; the H100's Chinese equivalent is the H800. Some reports say DeepSeek used H100s for this model, and others say H800s were used, so it's not clear.

But what's important about this model is that it's far more efficient to train. So even if it was trained on H100s, they wouldn't have needed nearly as many as OpenAI has needed for its models (few enough that they wouldn't have had to mass-import them and could have obtained them through back channels). It's also possible that they were able to train it on H800s.


- Joined: Aug 20, 2009
- Messages: 48,708
- Reaction score: 32,534

> Isn't that the goal of most American and multinational corporations anyway? Cheap and fast. Is it only a problem because it's Chinese? My question is: if the government keeps giving concessions and subsidies to all these American corporations and their heads (e.g. Musk and Zuckerberg), and American companies keep getting their shit pushed in by foreign entities, shouldn't we re-evaluate those concessions?
>
> China is beating America on EV production, and all America has is the terrible-looking Cybertruck and unattractive alternatives such as Rivian. And it's not as if America has learned its lesson. For example, a lot of people use Microsoft products, but no matter how much money Microsoft gets, it still lags behind Linux in certain respects.

Re: the bold, there are issues with anti-competitive practices, IP theft, and state control of (or the ability to control, when deemed necessary) sensitive information about customers.

Are you aware that TikTok collects information about what people are doing on their phones even when they aren't using the app, and that the Chinese government can walk in and take that data pretty much any time it wants? And that's just one example.

- Joined: Mar 7, 2022
- Messages: 2,288
- Reaction score: 3,862

> Re: the bold, there are issues with anti-competitive practices, IP theft, and state control of (or the ability to control, when deemed necessary) sensitive information about users.

Aren't these all things that companies like Meta and Google already do? Everything you listed above, we already allow companies on American soil to do openly, as long as they have the right "people." The only difference is that Chinese companies don't give money to American political campaigns (or maybe they do).

> Isn't that the goal of most American and multinational corporations anyway? Cheap and fast. Is it only a problem because it's Chinese? My question is: if the government keeps giving concessions and subsidies to all these American corporations and their heads (e.g. Musk and Zuckerberg), and American companies keep getting their shit pushed in by foreign entities, shouldn't we re-evaluate those concessions?
>
> China is beating America on EV production, and all America has is the terrible-looking Cybertruck and unattractive alternatives such as Rivian. And it's not as if America has learned its lesson. For example, a lot of people use Microsoft products, but no matter how much money Microsoft gets, it still lags behind Linux in certain respects.

The country that dominates AI will dominate the world. The economic ramifications should be obvious. It's a multi-trillion-dollar industry, maybe tens or even hundreds of trillions, and it will affect basically every existing industry. Its impact could be as big as, or even bigger than, that of personal computers and the Internet.

There are military implications too: not just autonomous weapons, but the scale and speed at which new advanced weapons can be developed.

- Joined: Aug 20, 2009
- Messages: 48,708
- Reaction score: 32,534

> Aren't these all things that companies like Meta and Google already do? Everything you listed above, we already allow companies on American soil to do openly, as long as they have the right "people." The only difference is that Chinese companies don't give money to American political campaigns (or maybe they do).

Even if we take it as given that "we already allow companies on American soil to do so openly," if you think that's the only reason, then discussing it further is pointless.


- Joined: Jan 20, 2004
- Messages: 33,814
- Reaction score: 26,131

Someone got DeepSeek's biggest model running on 8 Mac minis, lol.

This is a big deal as far as token cost goes.

| Model | Time-To-First-Token (TTFT), s | Tokens-Per-Second (TPS) |
|---|---|---|
| DeepSeek V3 671B (4-bit) | 2.91 | 5.37 |
| Llama 3.1 405B (4-bit) | 29.71 | 0.88 |
| Llama 3.3 70B (4-bit) | 3.14 | 3.89 |
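To put the table above in everyday terms, here is a rough sketch of how long a full answer would take on that setup. It assumes end-to-end time is about TTFT plus the remaining tokens divided by TPS (i.e. a constant decoding speed), and the 500-token answer length is made up for illustration; `response_time` and `BENCHMARKS` are hypothetical names. One likely reason the 671B DeepSeek model streams faster than the dense 405B Llama is that DeepSeek V3 is a mixture-of-experts model that activates only about 37B of its parameters per token.

```python
# Figures copied from the benchmark table (4-bit quantized models on 8 Mac minis).
# Each entry is (time-to-first-token in seconds, tokens per second).
BENCHMARKS = {
    "DeepSeek V3 671B (4-bit)": (2.91, 5.37),
    "Llama 3.1 405B (4-bit)": (29.71, 0.88),
    "Llama 3.3 70B (4-bit)": (3.14, 3.89),
}


def response_time(ttft_s: float, tps: float, n_tokens: int) -> float:
    """Rough end-to-end latency: wait for the first token, then stream the rest.

    Simplifying assumption: decoding speed is constant, so the remaining
    n_tokens - 1 tokens arrive at `tps` tokens per second.
    """
    if n_tokens < 1:
        raise ValueError("n_tokens must be >= 1")
    return ttft_s + (n_tokens - 1) / tps


if __name__ == "__main__":
    n = 500  # hypothetical answer length in tokens
    for model, (ttft, tps) in BENCHMARKS.items():
        print(f"{model}: ~{response_time(ttft, tps, n):.0f} s for {n} tokens")
```

Under these assumptions the 405B dense model needs several times longer than DeepSeek V3 for the same answer, which is the "token cost" point in practical terms.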


- Joined: Sep 13, 2007
- Messages: 16,424
- Reaction score: 20,030

> You mean like Teslas? Because those spontaneously blow up too.

Are 7 Teslas a day spontaneously blowing up? Because that's what the reports are saying about BYD cars.

- Joined: Sep 3, 2014
- Messages: 30,206
- Reaction score: 80,826

> Maybe, but it's also innovative.
>
> A deep dive into DeepSeek's newest chain of thought model
>
> El Reg digs its claws into Middle Kingdom's latest chain of thought model (www.theregister.com)
>
> According to these guys, it offers improved performance and a much lower cost. I wouldn't use Chinese tech like that anyway, but if your only goal is cheap and fast, it's apparently a good solution.

Damn. You're actually right for a change.

The key here is that (A) it's open source, so it's available to everyone and improvements to it will likely come much faster, and (B) it takes less energy. That's a huge deal given our shortage of electricity as AI, blockchain, and EVs, which are all energy hogs, keep expanding.

- Joined: Sep 3, 2014
- Messages: 30,206
- Reaction score: 80,826

The AI I really want is one that runs entirely on my own machine, without needing processors elsewhere. I have a couple of reasons for this:

1. It keeps my queries and the information I'm after to myself. Even if my local AI reached out to other sources to answer my questions, it could do so through a VPN to shield me from intrusive tech companies that want to know my whole world.

2. It would require far less energy, and as an energy/technology professional, I know what kind of energy crunch is coming. Finding an AI that uses less energy is a big deal.

If this AI can eventually pull those two things off... I'm interested.


- Joined: Mar 28, 2018
- Messages: 1,321
- Reaction score: 1,661

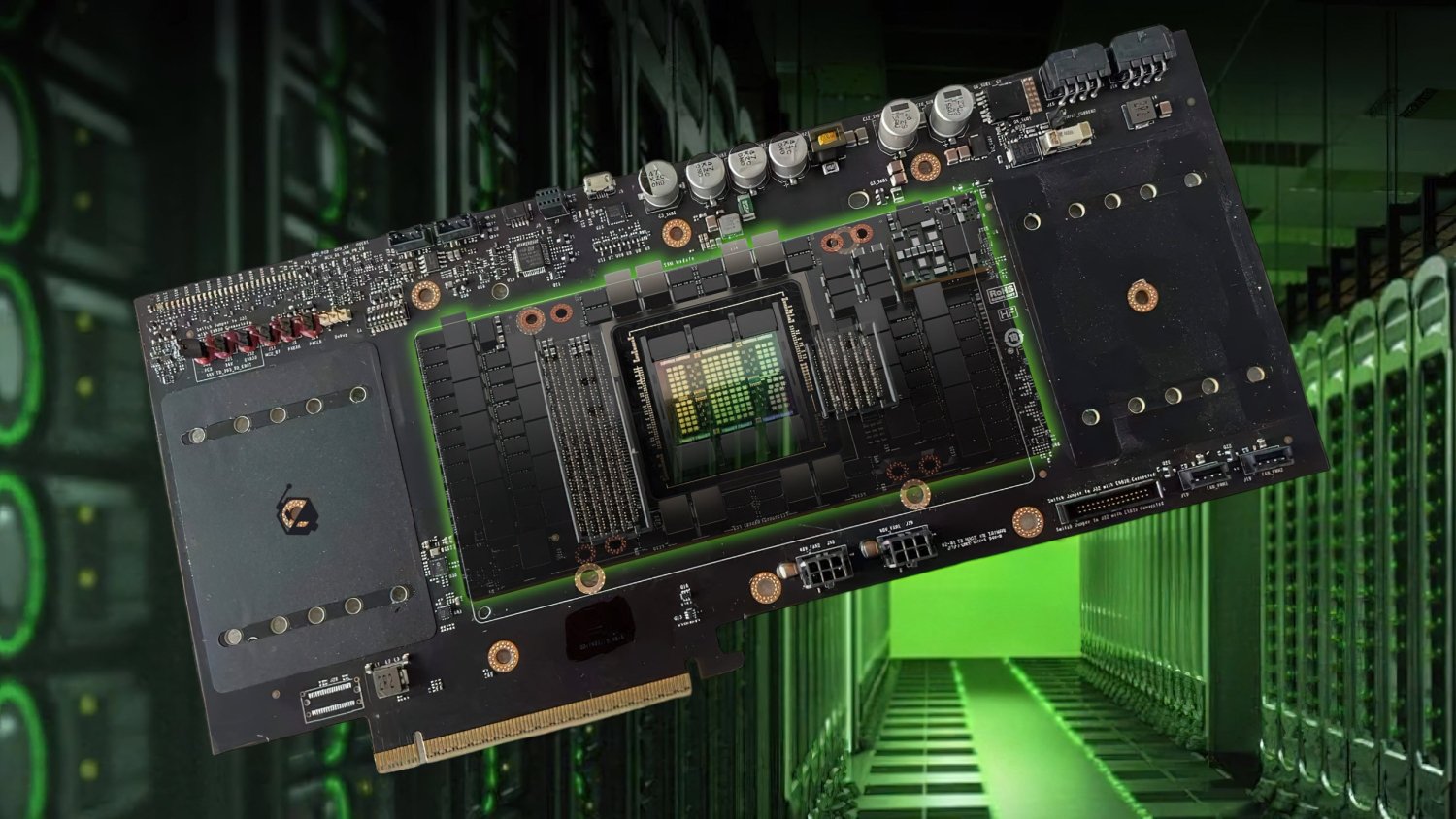

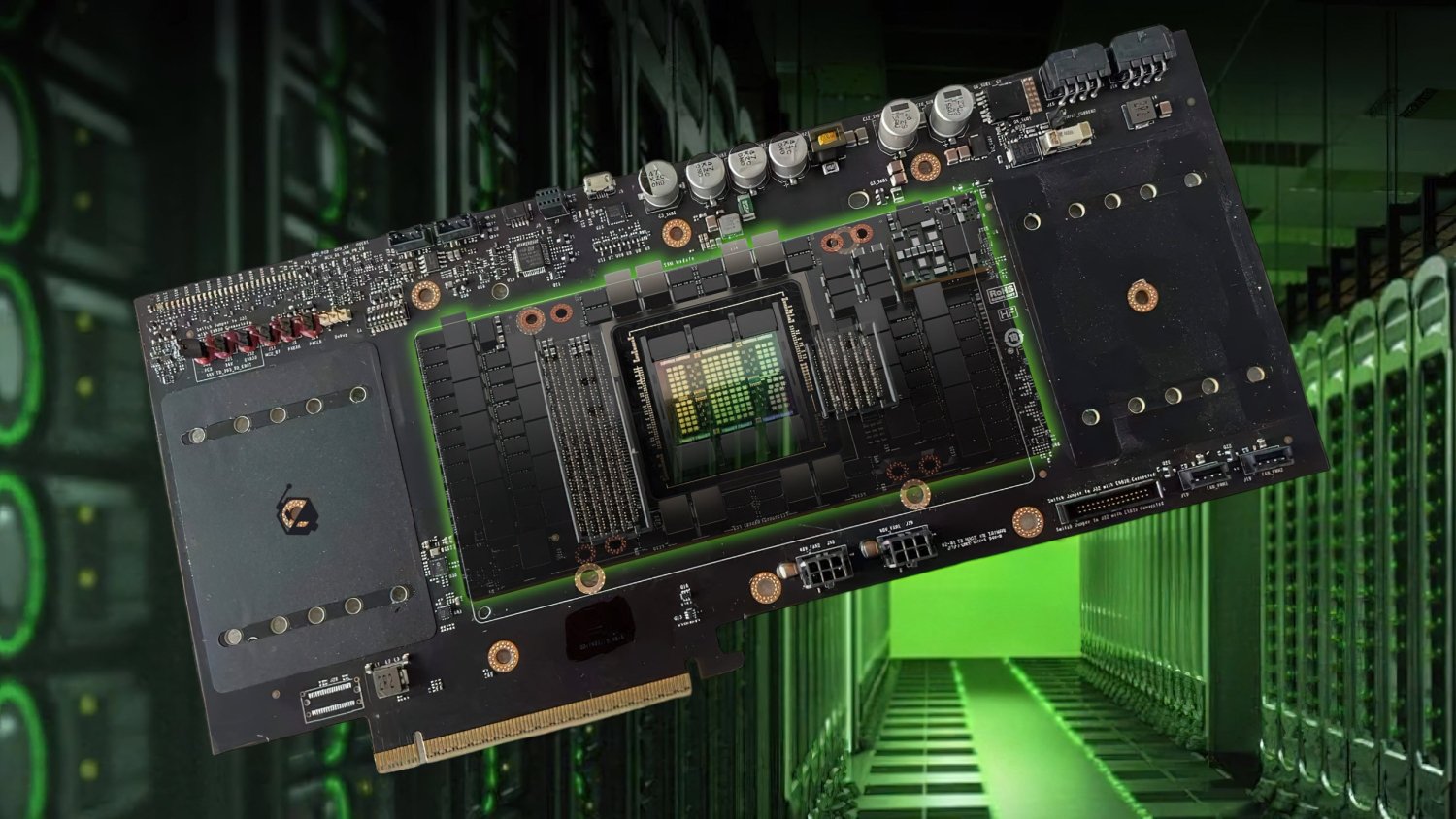

That's not a Chinese chip you're talking to.

www.tweaktown.com

NVDA powered, doggies.


and they cost billions.

Price point is more fake than a plastic burger.

Chinese AI firm DeepSeek has 50,000 NVIDIA H100 AI GPUs says CEO, even with US restrictions

Chinese AI company DeepSeek says its DeepSeek R1 model is as good, or better than OpenAI's new o1 says CEO: powered by 50,000 NVIDIA H100 AI GPUs.


- Joined: Jan 20, 2004
- Messages: 33,814
- Reaction score: 26,131

> That's not a Chinese chip you're talking to.
>
> NVDA powered, doggies.
>
> Chinese AI firm DeepSeek has 50,000 NVIDIA H100 AI GPUs says CEO, even with US restrictions
>
> Chinese AI company DeepSeek says its DeepSeek R1 model is as good, or better than OpenAI's new o1 says CEO: powered by 50,000 NVIDIA H100 AI GPUs. (www.tweaktown.com)

TweakTown is full of sh$t. The CEO said a number of times that it was done on H800 cards because they couldn't get their hands on H100 cards due to import restrictions and cost. It comes down to the amount of memory they had available to build their models. In what turned out to be a pretty inventive move, they decided to use 8-bit floating-point precision instead of 32-bit. That saved them time and a significant amount of processing.
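For a rough sense of why the 8-bit versus 32-bit point matters for memory, the sketch below multiplies a parameter count by the bytes each format needs per parameter. It is only back-of-the-envelope arithmetic, not DeepSeek's training method: it counts weights alone, takes the 671B figure from the benchmark table earlier in the thread, and ignores activations, gradients, optimizer state, and the KV cache. `BYTES_PER_PARAM` and `weight_memory_gb` are hypothetical names.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {
    "FP32": 4.0,
    "BF16": 2.0,
    "FP8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Assumption: ignores activations, gradients, optimizer state and KV cache,
    all of which add substantially more during training and inference.
    """
    return n_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 671e9  # DeepSeek V3 parameter count, as listed in the benchmark table
    for p in BYTES_PER_PARAM:
        print(f"{p:>5}: {weight_memory_gb(params, p):,.1f} GB")
```

Going from 32-bit to 8-bit cuts weight storage by a factor of four, and at 4-bit the weights alone still come to about 335.5 GB, which helps explain why the Mac mini demo split the model across several machines.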
