
I have a feeling the RTX 4080 will be a lot harder to obtain than the RTX 4090.

Wait for 11/3.

Is there somewhere I can pre-order an RTX 4080?

You should wait. Those "alleged benchmarks" are worthless. We won't know what it can do until it's out.

https://hothardware.com/news/13900k-clobbers-7950x-alleged-benchmark-slides

I think I have made my choice, but I will wait 2-3 weeks for a few YouTube videos just to confirm.

@Madmick

You should wait. Those "alleged benchmarks" are worthless. We won't know what it can do until it's out.

It's out today.

This is the spec I will be getting. Can you please have a look and advise for and against?

I am reusing my GPU and SSDs.

As it looks: $2500. In 2-3 years' time I will upgrade the GPU to something better and more recent, and I expect to keep this build for another 10 years. My Alienware, bought in 2012, is retiring. It served me well; the only issue I had was in 2016, when the PSU failed and had to be replaced. I ran it 24/7 the entire time, with maybe a combined week of downtime. The GPU was replaced in 2018.

@Madmick


I can’t answer that without knowing what you want out of it first. If you simply want it to run games at an acceptable level, the answer is probably yes, it will last 10 years. Obviously, 5-6 years from now it will likely be showing its age some.


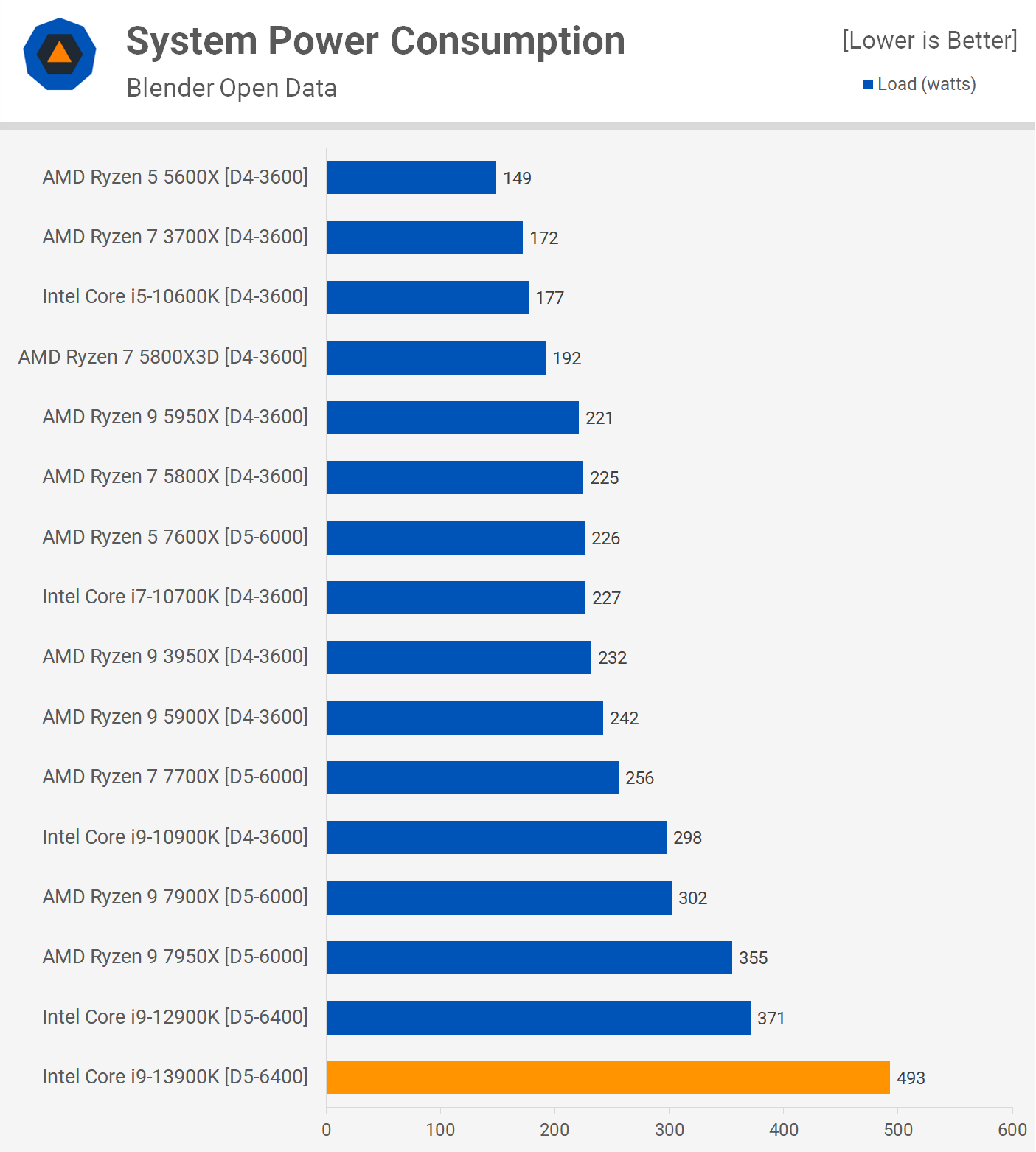

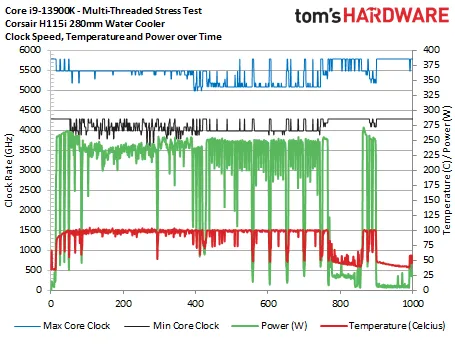

Not planning to OC. Just for gaming.

These aren't OC'd wattage draws. These aren't stress tests, either (e.g. Furmark, Prime95), except the Tom's Hardware JPEG. For Techspot, this system...

- i9-13900K

- MSI CoreLiquid S360 360mm CLC

- MSI MPG Z790 Carbon WiFi DDR5

- G.Skill Trident Z5 RGB DDR5-6400 RAM [2x16GB, Single Rank]

- M.2 NVMe SSD (unspecified)

- MSI Prospect 700R Mid-ATX

...is drawing 493 watts in Blender. Blender.

Meanwhile, the RTX 4090 Founders Edition draws a sustained maximum load of ~470W, with spikes up to ~500W. Granted, its typical maximum gaming draw is ~350W, but Tom's Hardware showed it routinely drawing ~450W in the Metro Exodus loop. And yes, games won't load the CPU as heavily as Blender does, but the most demanding games won't be far off.

I've battled against excessive PSU recommendations for the past decade, but even I won't tell you 1000W is sufficient for that pairing. It's insufficient even for the most shoestring build around a 13900K + 4090. Add any extra fans, drives, PCIe cards, or attached external devices (even temporary ones), and your draw increases.
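For a rough sense of the arithmetic behind that 1000W verdict, here's a minimal Python sketch that adds the Techspot Blender figure (493W) to the RTX 4090 FE's sustained (~470W) and spike (~500W) figures quoted above, then expresses each total as a share of a 1000W rating. Treat it as a pessimistic back-of-envelope assumption, not a measurement: the Techspot number is a whole-system reading that already includes some GPU draw, so simply adding the two overstates the real total. The constant names and the `load_fraction` helper are illustrative only.

```python
# Back-of-envelope PSU load check using the figures quoted in this thread.
# Assumption: naively adding the Techspot whole-system Blender reading to the
# RTX 4090 FE figures gives a pessimistic upper bound, because the Techspot
# reading already includes some (mostly idle) GPU draw.

SYSTEM_BLENDER_W = 493   # Techspot: 13900K system drawing 493W in Blender
GPU_SUSTAINED_W = 470    # RTX 4090 FE sustained max load (~470W)
GPU_SPIKE_W = 500        # RTX 4090 FE peak spikes (~500W)
PSU_RATED_W = 1000       # the PSU rating under discussion


def load_fraction(load_w: float, psu_w: float) -> float:
    """Return a load as a fraction of the PSU's rated output."""
    return load_w / psu_w


# Worst-case totals: CPU-heavy system load plus a fully loaded GPU.
sustained = SYSTEM_BLENDER_W + GPU_SUSTAINED_W
spike = SYSTEM_BLENDER_W + GPU_SPIKE_W

for label, watts in [("sustained", sustained), ("spike", spike)]:
    frac = load_fraction(watts, PSU_RATED_W)
    print(f"{label}: {watts} W = {frac:.0%} of a {PSU_RATED_W} W PSU")
```

Running it prints 963 W (96%) for the sustained case and 993 W (99%) for the spike case, which leaves essentially no room for the extra fans, drives, and cards mentioned above.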

Now, if you're going to get a lesser GPU, cool, but be aware that a 1000W PSU could constrain your GPU selection in the years ahead if you choose the 13900K.

What PSU would you advise then? At the moment I'm using a 1080, not a Ti.

Also, when I upgrade my GPU at some point, I will be aiming for something less power-hungry.

If you're just gaming, then why the i9?

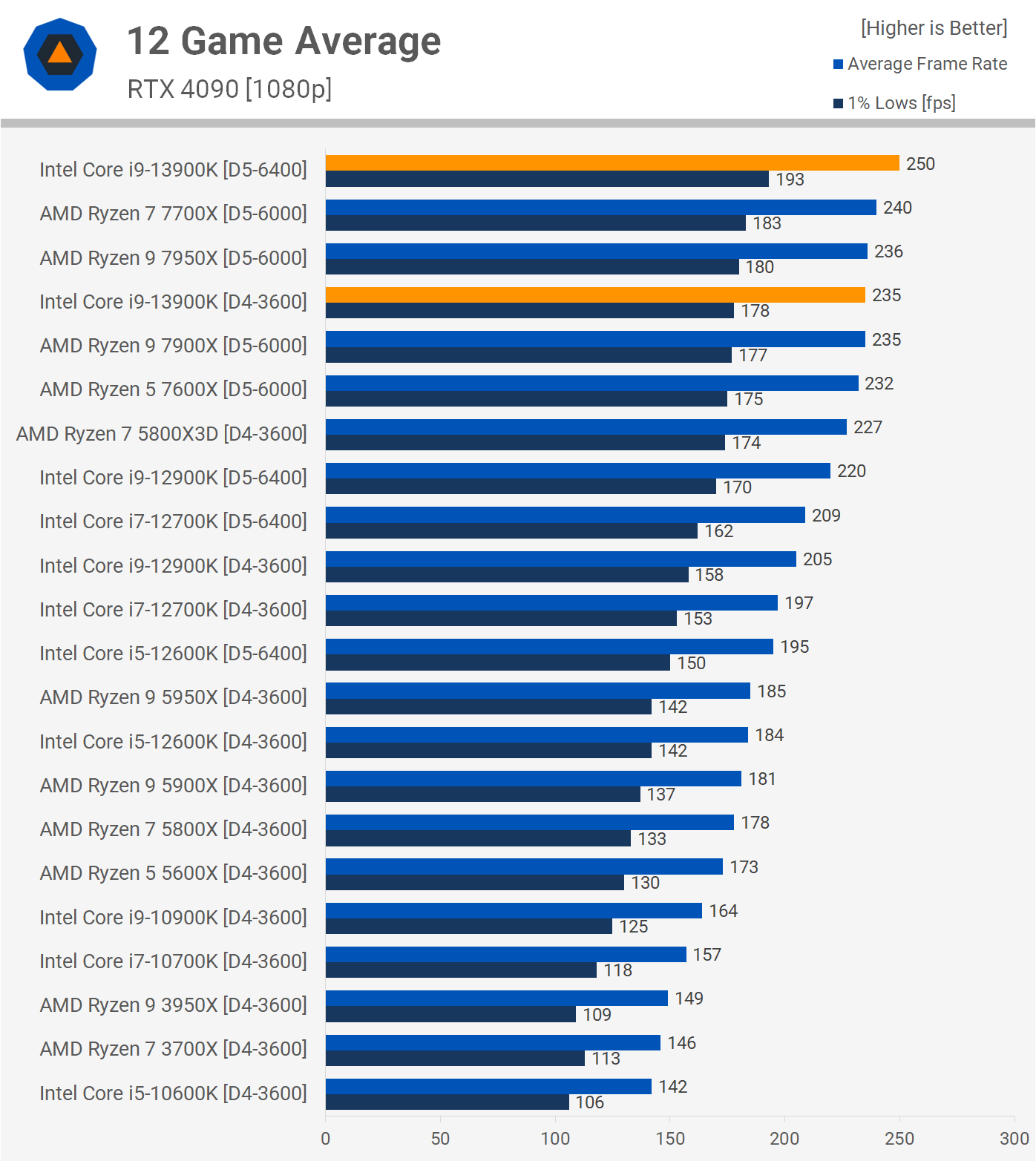

I’ve been looking for benchmarks of the 4090 paired with the new CPUs at 4K but haven’t seen any. I’d like to see whether the 4090 is able to break out of the GPU bottleneck. Almost all the charts I’ve seen, though, are at 1080p, with a few at 1440p.

If you have a 1080 now, and you know you intend to get a less power-hungry GPU when you upgrade, the 1000W should be fine. I'm just highlighting how absurd the 13900K's power draw is. Just make sure that wattage pads you sufficiently to meet the demand of whatever you imagine your build could grow to contain over the long term:

https://outervision.com/power-supply-calculator

I'll reiterate that I think the 13900K is a terrible choice for someone who is only interested in gaming. Godawful value, and now you can see how extreme its power consumption and heat output are. Why get this CPU if not for epeen? And if epeen is the pursuit, then why isn't the 4090 (or the future 4090 Ti) the desired GPU?

After all, if you've followed recent posts on Zen 4 and Alder/Raptor Lake comparisons, when you look at that chart you should be paying attention to the DDR5 RAM speeds. The 13900K depends on its superior RAM scaling and higher supported frequency ceiling to reach the top of that gaming chart. Notice that on DDR4-3600 RAM it's losing to both the 7700X and 7950X. Meanwhile, you've got DDR5-5200 RAM selected, meaning the 13900K might be neck-and-neck on Techspot's chart at that RAM speed. It's also disgustingly overpriced RAM, I might add. You're really gonna sink over £340 into 64GB of sub-6000MHz RAM? That's $380 USD. $380 on fucking memory? To go with the 13900K...but then not get the 4090? What are we doing here?

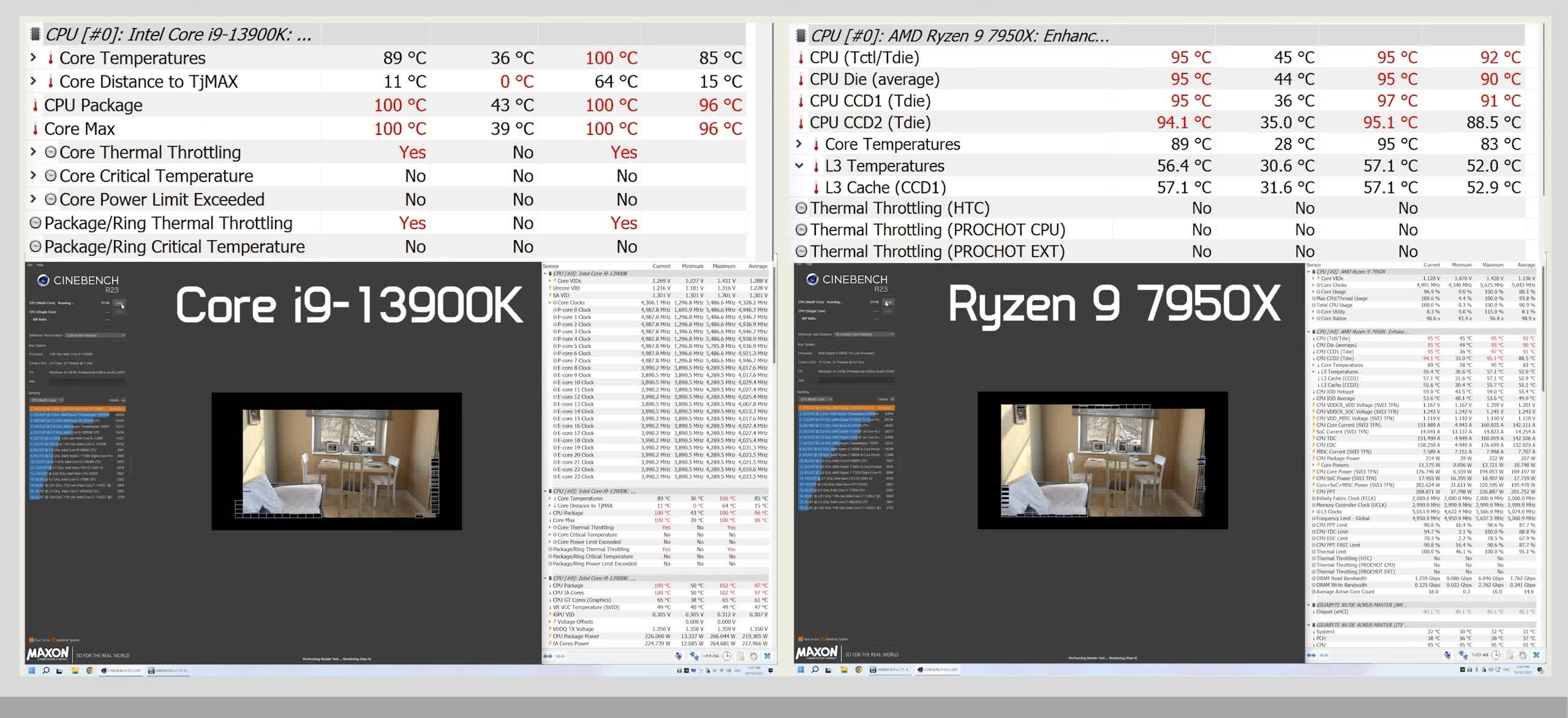

And the Corsair H100i to cool it? When you can plainly see that this generation (both Zen 4 and Raptor Lake) runs up to a thermal limit, 100°C in the 13900K's case, and lets the strength of the cooling determine whether or not you get peak performance from the CPU? Don't horse around with a 240mm. You want 280mm or 360mm.

If value of parts is a consideration, and it is if we're taking anything less than the 4090 (or AMD's upcoming flagship), then I think it makes far more sense to consider the i7-13700K, i5-13600K, R7-7700X, R5-7600X, or R7-5800X3D. After all, as I pointed out long ago when the ARK listings were revealed, the 13700K has the same number of performance cores (P-cores) as the 13900K. Disregard its 300MHz lower peak turbo on the top core. Obviously the 13900K can't sustain that clock across its top 4-6 cores under peak gaming load; thermals won't allow it, so it is reduced to a paper advantage. Once the 13700K comes out, on a 280mm+ cooler, expect its gaming performance deficit to be nominal.

I don't know what your fascination is with the 13900K, but I gently suggest you consider an alternative strategy for investment of your resources.