Tech Gaming Hardware discussion (& Hardware Sales) thread

- Thread starter Madmick

- Start date

- Joined

- Mar 10, 2009

- Messages

- 11,385

- Reaction score

- 2,738

I would suggest you check your manual, but based on the way those ports are grouped by the printed boxes I would assume you don't mix-and-match. So if you use 1 for your CPU you'd use 3+4 for your video card while leaving 2 unused.

Hello friends, I know that 1 is for the CPU cable. Is 3 also for the CPU?

I need two VGA cables for my GPU. Which ports should I use?

- Joined

- Oct 4, 2020

- Messages

- 8,952

- Reaction score

- 19,885

But it says CPU & GPU. Shouldn't it be 2 and 4? I threw away the manual.

- Joined

- Jun 13, 2005

- Messages

- 66,851

- Reaction score

- 38,964

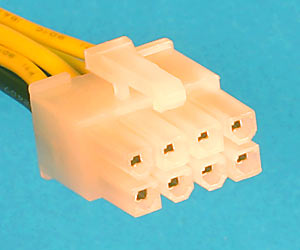

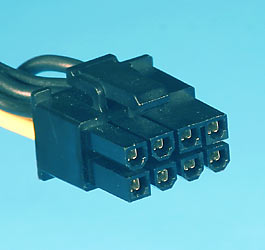

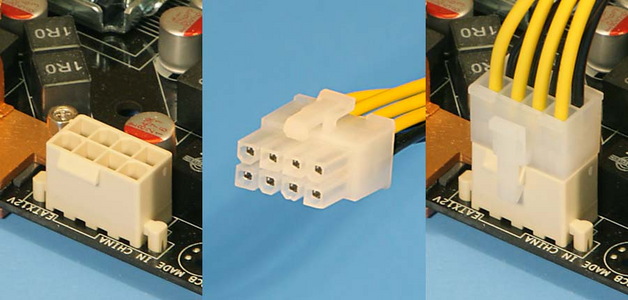

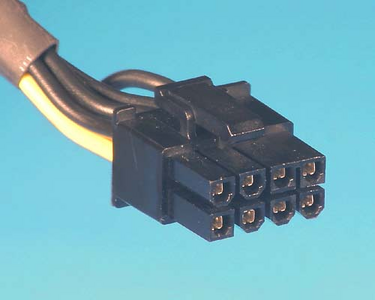

You can use any of them for the CPU or VGA. That's why they're grouped together. Notice they're all identical. Those are 8-pin EPS12V cables.

Now, the one similar port that you don't want to confuse with those is the PCIe 8-pin. The easiest way to tell is usually the color. The plastic that inserts into these PCIe ports is black. The plastic that inserts into the EPS ports above is white.

- Joined

- Oct 4, 2020

- Messages

- 8,952

- Reaction score

- 19,885

Thanks bro. XPG replied to my email and said 1 is for cpu but they ignored the gpu part lol.

- Joined

- Jun 13, 2005

- Messages

- 66,851

- Reaction score

- 38,964

Let me be clear. The EPS is compatible to supply VGA power. VGA is old. Some consider it obsolete, but maybe you have a VGA display. Typically, for data, not power, VGA ports are on the back of motherboards, so you'll be plugging the PSU into the motherboard. If you're talking about the ports that connect directly to a modern GPU, you're probably after the 8-pin PCIe cables I just talked about (the more powerful modern GPUs use similar power cables with even more pins than this, btw). Most modern GPUs don't even include a VGA port, anymore, because nobody is buying an RTX 4060 or an RX 7700 to connect it via VGA to a modern gaming monitor or modern TV. You'll use DisplayPort or HDMI.

All about the various PC power supply cables and connectors

www.playtool.com

vs.

- Joined

- Oct 4, 2020

- Messages

- 8,952

- Reaction score

- 19,885

The VGA here means Video Graphics Adapter (PCIe connectors)

- Joined

- Jun 13, 2005

- Messages

- 66,851

- Reaction score

- 38,964

I know. I was clarifying.

- Joined

- Jan 20, 2004

- Messages

- 34,925

- Reaction score

- 28,118

Intel's new SoCs are going to make a case for why you'd need a dedicated GPU for lower-end PC gaming. Their SoC actually closes in on higher-end dedicated GPUs. If you're not interested in Nvidia rendering or proprietary gaming speed, it comes real close for a lot less money at a base level. It apparently comes in around the performance level between a 3060 Ti and a 3080 Ti in some cases.

Core Ultra? It definitely doesn't come in at the performance of a 3060 Ti.

- Joined

- Jun 13, 2005

- Messages

- 66,851

- Reaction score

- 38,964

Yeah, I don't know where PEB gets this clickbait. The flagship of the Core Ultra series is going to be a competitor to the Ryzen Z1 Extreme and Ryzen 7 7840U in the latest Steam Deck competitors. The Core Ultra 7 155H is the processor in the MSI Claw A1M that generated all the buzz at CES (I don't really understand why, it's just another in a sea of knock-offs).

Intel hasn't told anyone what the TMU or ROP count is, but considering they're built on the same architecture as the Arc cards, I think it will be 64 and 32, respectively. That means overall performance will be more like a GTX 1660, though not quite that good, and with much lower memory bandwidth because it will use shared LPDDR5 system RAM, not dedicated GDDR5 video RAM.

- Joined

- Jun 13, 2005

- Messages

- 66,851

- Reaction score

- 38,964

Probably a stupid question but would playing at 60hz on a 100hz (or 120/144/180) monitor look worse than 60hz on a 60hz monitor? It's kind of a pain trying to find a 1440p 60hz monitor.

Probably not. Not sure.

Interestingly related: I tested limiting my monitor to 60Hz for a ported game that was limited to 60fps on PC anyway. It looked like shit when I did this. Even though the game never renders above 60fps, returning my monitor to its default max refresh rate in the NVIDIA control panel made all the difference in the world. Obviously once you just let the display run at its native refresh rate it's fine. All that judder/tearing goes away. But my monitor's refresh rate is also a clean multiple of 60 (e.g. 120/240/360/480). Then again, I don't have any 60Hz monitors lying around anymore, so I didn't have a chance to test it on a 60Hz display to compare.

But the 100Hz can't simply double or quadruple the game's 60Hz to a higher, smoother output. There would have to be interpolation, and I don't know what would handle that interpolation. I assume the GPU via hardware acceleration? Because otherwise a workaround would be to use NVIDIA's control panel as it ought to let you choose the display's output. For example, my previous 144Hz monitor could be limited to 120Hz. But with 100Hz, that isn't an option, because it's above 60Hz, but not able to output a clean double 120Hz framerate.

Nevertheless, I still think the issue there was choking the display at a hardware level, not upscaling the game's fps for output. I assume the 100Hz will look smoother than 60Hz despite that it will have to pick and choose which of the frames to insert to get to 100Hz. What I don't think would look good would be to choke the 100Hz to a native 60Hz output.
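A rough way to picture the "pick and choose" part is to count how many refreshes each game frame stays on screen. This is only a sketch under simplifying assumptions: a fixed-refresh display, vsync on, and a game delivering frames at a perfectly steady rate. `repeat_pattern` is a made-up helper for illustration, not anything from a driver or control panel.

```python
def repeat_pattern(fps: int, refresh_hz: int, frames: int = 6) -> list[int]:
    """Refreshes each game frame is held for on a fixed-refresh display.

    Assumes perfectly paced frames and vsync; real games will be messier.
    """
    # Frame i is ready at i/fps seconds and appears on the first refresh at or
    # after that moment, i.e. refresh number ceil(i * refresh_hz / fps).
    first_refresh = [-(-i * refresh_hz // fps) for i in range(frames + 1)]
    # The hold time of a frame is the gap until the next frame's first refresh.
    return [first_refresh[i + 1] - first_refresh[i] for i in range(frames)]


if __name__ == "__main__":
    for hz in (60, 100, 120):
        print(f"60 fps on {hz}Hz:", repeat_pattern(60, hz))
```

In this model, 60Hz and 120Hz hold every frame for the same number of refreshes (1 or 2), while 100Hz alternates between holding a frame for 2 refreshes and 1 refresh. That uneven cadence is what can read as judder on a fixed-refresh display. Nothing is interpolated here; frames are simply repeated.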

- Joined

- Jul 4, 2009

- Messages

- 70,092

- Reaction score

- 43,148

I found a basic bitch Acer 100hz 1440p monitor at MC for $150. Looking to get either a 7800 XT or 4070 Super and those aren't gonna get 100 FPS at 1440p Ultra without using FG.

- Joined

- Jun 13, 2005

- Messages

- 66,851

- Reaction score

- 38,964

Does the Acer do Freesync? Because if the 7800 XT or 4070 Super won't get to 100Hz we're talking about a very demanding game. It doesn't sound like the game is hardcapped at 60Hz. It sounds like you just know it's not likely your hardware will run the game at a higher framerate @1440p with the settings you want.

If that's the case, then this is what Freesync/Gsync were created to improve. They really don't matter at higher framerates. That's why the earliest versions just straight up turned off above 144Hz. Their job is to eliminate tearing at lower framerates, and that's where they shine: from 20-144. Even the cheapest monitors from the last several years usually support basic Freesync.

- Joined

- Jul 4, 2009

- Messages

- 70,092

- Reaction score

- 43,148

Yeah it has FreeSync. TLOU1 only averages about 75 FPS at 1440p ultra on either card. Cyberpunk is high 60s.

- Joined

- Apr 18, 2007

- Messages

- 13,335

- Reaction score

- 6,656

Probably a stupid question but would playing at 60hz on a 100hz (or 120/144/180) monitor look worse than 60hz on a 60hz monitor? It's kind of a pain trying to find a 1440p 60hz monitor.

The only difference would be caused by the panel response time, which is in the one-to-three-millisecond range and won't be noticeable at 60Hz.

Gamers Nexus did a video recently relating to system frame time and input latency. A game that is producing 100 frames per second will have at minimum 36ms of input latency.
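For scale, here is the frame-time arithmetic behind numbers like that. The 36ms figure is taken from the post as stated and is not derived or verified here; `frame_time_ms` is just an illustrative helper.

```python
def frame_time_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    for fps in (60, 100):
        print(f"{fps} fps -> {frame_time_ms(fps):.2f} ms per frame")
    # How many 100 fps frame times fit into the quoted 36 ms of latency.
    print("36 ms at 100 fps spans", 36 / frame_time_ms(100), "frame times")
```

So at 100 fps a single frame takes 10ms, and the quoted 36ms would span several frame times, which fits the idea that input latency covers the whole system and not only one rendered frame.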

- Joined

- Jun 13, 2005

- Messages

- 66,851

- Reaction score

- 38,964

Then that's your solution. Unfortunately I can't find a supported framerate range for that monitor (it doesn't show up in the Official List, but that list has never been exhaustive). Even the worst, most limited implementations of Freesync support it in the 40-60Hz range. So worst case scenario you could just cap in-game at 60Hz, and turn on Freesync, and that would look as good. No need to waste your time trying to find an obsolete 60Hz monitor. This is what Freesync is for.

*Edit* I'm going to speculate from the specification listing on that Microcenter page that the monitor you're considering supports Freesync from 48Hz-75Hz or from 48Hz-100Hz.
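As a quick sanity check on those two guesses, this sketch tests whether the frame rates mentioned in this thread would land inside each window. The windows are the speculated ones above, not confirmed specs for this monitor, and 68 is only a stand-in for "high 60s". `freesync_active` is a hypothetical helper, not an AMD or NVIDIA API.

```python
def freesync_active(fps: float, vrr_min_hz: float, vrr_max_hz: float) -> bool:
    """True if the frame rate falls inside the variable-refresh window."""
    return vrr_min_hz <= fps <= vrr_max_hz


# Speculated windows from the post; not confirmed for this monitor.
WINDOWS = {"48-75Hz": (48, 75), "48-100Hz": (48, 100)}

# 60 = in-game cap, 75 = TLOU1 average quoted above, 68 = assumed "high 60s".
FRAME_RATES = {"60 fps cap": 60, "TLOU1": 75, "Cyberpunk": 68}


def coverage() -> dict[tuple[str, str], bool]:
    """Map (window, game) to whether that frame rate sits inside the window."""
    return {
        (window, game): freesync_active(fps, low, high)
        for window, (low, high) in WINDOWS.items()
        for game, fps in FRAME_RATES.items()
    }


if __name__ == "__main__":
    for (window, game), ok in coverage().items():
        print(f"{game} in {window}: {'inside' if ok else 'outside'}")
```

Under either guess, all three frame rates sit inside the window. The case to watch would be dips below 48 fps, which would fall outside both.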

- Joined

- Jun 13, 2005

- Messages

- 66,851

- Reaction score

- 38,964

I'm about to just say fuck and get a 4k 60hz monitor that's only $30 more. I'm still content with 60hz and vsync, all this talk and "research" is giving me a headache, dammit!