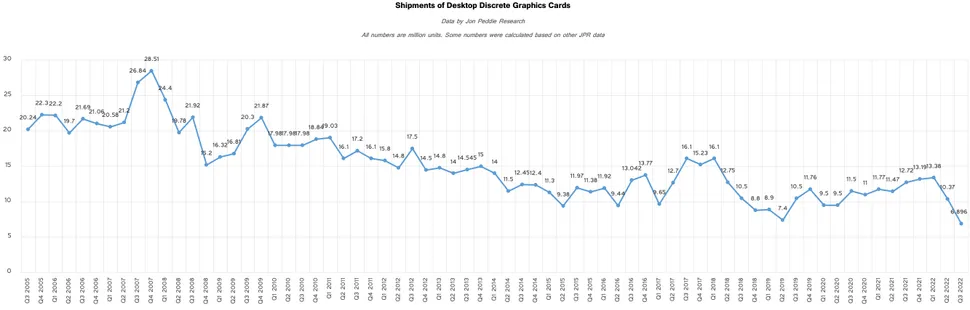

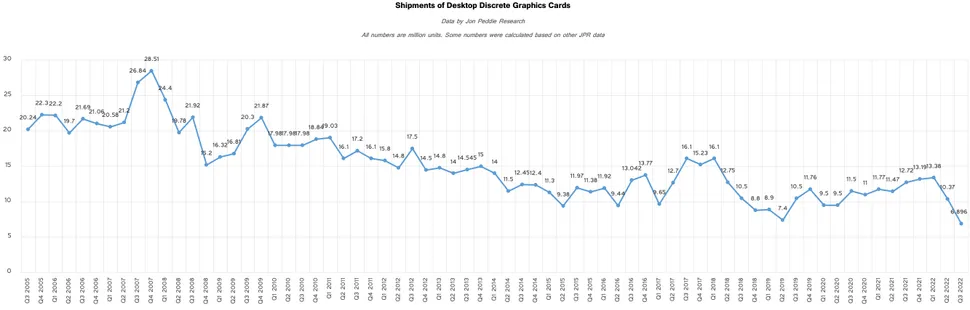

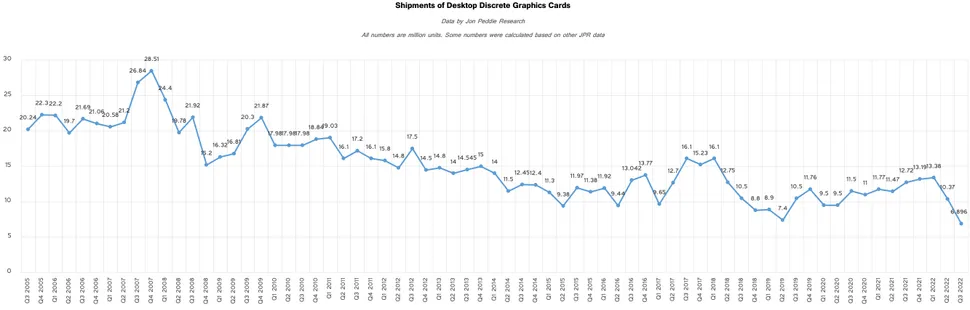

In case anyone hasn't seen. GPU sales in Q3 of 2022 hit a 20-year low.

Hoping the trend continues for Q4 so AMD and Nvidia come back to reality.

I mean I hope so, but I’m not so optimistic that that is what is happening. We did discuss it a few weeks ago in this thread.

I’m not sure I trust that graph either. Where did you find it? It’s not even labeled on the side. What is that 0-30 number? Also I am surprised that there is no sales peak at all during the 3000 series run.

I’m surprised because it was an almost 2 year period where you couldn’t find a graphics card anywhere. So the only way to explain that figure is that they didn’t make more to sell, and I just don’t think that’s true.

This is the situation. GPU sales are down across the board. That's not just true for new cards or flagships. That's all of them. Globally. Despite the crash in prices we've seen post-Crypto. I mentioned this earlier. NVIDIA obviously projected this downturn. That's why they released two new cards at a historically high price, and almost certainly with a historically high profit margin. Knowing that late 2022 would see this downturn, they figured out their best strategy to maximize profit yield was to increase the profit per unit.

This is why stocks are out nearly across the board. Because they aren't producing the same number of xx80 units as with past generations. That was never the strategy. Again, I'm yet to hear how this contradicts the truth that stocks are sold out, selling out far north of MSRP, and as just demonstrated, even selling out rapidly at sampled Microcenter locations. They might be grossly overpriced, but they're moving at that price.

I’m not sure if you’re trying to restart this fight but literally no one ever said Nvidia wasn’t trying to maximize its profits by selling their cards for as much as they thought they could get away with.

He pulled it from Tom's Hardware:

https://www.tomshardware.com/news/sales-of-desktop-graphics-cards-hit-20-year-low

Our original dispute stemmed from your objection to my contention that there wasn't an excruciating inability to sell the inventory that NVIDIA is producing. This isn't a fight, I have no emotional attachment to this, don't take this personally. I simply urged you to focus on hard data, not trending feel-good rumors about how the scalpers are all losing their asses on the RTX 4000 series. Conversely, this more recent chart is an example of Tom's Hardware conveying data. That's why I focused on indications of average pricing, premiums over typical original retail pricing targets (yes, even for AICs), the fact so many retailers were out of stock, and how quickly inventories were turning over.

They were never out of stock with the 4080s and a bunch of sources were shared for that. I wasn’t following feel good trending stories. It’s known that they have struggled to sell 4080s and continue to.

It isn't that the 4080 is a market juggernaut. It's going to move fewer units than even the RTX 2080 did, I suspect. For example, the most significant analysis of hard sales data tech sites like Tom's Hardware shared in previous months was that the 4090 is moving about 4x as many units as the 4080 on eBay. That's a big deal because the 4090, unlike RTX 3000 series cards, is also well north of $1K. This is why, even with the premiums above retail pricing I highlighted, the scalpers are just breaking even on the 4080 after eBay takes its cut. That's why the scalpers have no incentive to sell them, and probably sought to dump them back onto Newegg, which is likely the reason Newegg decided they weren't interested in selling the same cards twice. Meanwhile, unfortunately, despite its similarly exorbitant pricing, those scalpers are still probably making a modest profit on 4090's, due to a more disproportionate demand relative to supply, and that's why they continue to buy 4090's to re-sell them on resale markets like eBay.

Similarly, I am not optimistic that @KaNesDeath's inference the downturn in sales is about the rising MSRPs is accurate. It's hard to tell. After all, as you can see, the latest crash in sales preceded the launch of the latest GPUs, and the general downward slope persists not just across recent years, but across a whole decade. Further complicating attempts to deduce factors of the sales depression is that the sales throughout the pandemic were constrained by many contributing bottlenecks to raw production. Because, as you mentioned, thanks in large part to the cryptoboom, it wasn't demand that was in a shortage despite the elevated MSRPs. Resale pricing was insane during the pandemic.

Compounding all of this is that, while PC gaming is more popular than ever, the need for a discrete desktop GPU to game effectively has never been lower relative to mainstream hardware. This was never a small part of the market, either, btw. There were numerous months where the Intel HD series was the most popular "GPU" on the Steam survey 7-8 years ago. Now these iGPUs are more powerful than ever. And they aren't just in office-targeted desktops, they're in the new rising dominant class of casual desktops, which is the "AIO" class (where the computer is just a display with all the parts inside of it). Those AIOs use the same processors as laptops. Meanwhile, gaming laptops have never been more capable, and that isn't shown in his chart. Next, discrete GPUs themselves are aging out more slowly than ever relative to gaming minimums. No need to upgrade. Finally, 2020 just saw the release of a new console generation for the first time in 7 years (notice the dip post-2013).

You and I are on the same side. Kane's not wrong, both companies are being greedy, but NVIDIA is being way greedier, and as the market leader, they are the ones who are price-setting. They're in the driver's seat. We all want them to suffer. I'm just not convinced they're losing their ass, here. Unfortunately, there have been some wrenches in the rollout of the 7900 XTX, partly because AMD oversold, and also because the reference cards have had some issues. Because of that, despite the 4080's poor reception, I'm not optimistic the 7900 XTX will become the first AMD competitor to gain a larger share on that Steam survey than its direct competitor.

TLDR, if you want to hold me to a prediction to say "I told you so, Madmick, you were wrong", hold me to that. I believe the 4080 will maintain a larger share on the Steam survey than the 7900 XTX throughout their lives. Fingers crossed that I'm wrong. We're both rooting for the underdog.

We discussed specific variants, and how many of them were out of stock at their original targeted pricing (whether MSRP or a manufacturer-indicated premium above that), which was true across the board at numerous times during that exchange, if you snapshotted them. The point was the 4080 was out of stock at MSRP and its lowest intended pricing at major retailers. This was meant to highlight the larger truth that the relationship of supply to demand is healthy, but as I pointed out, that's because NVIDIA never intended to furnish as robust a supply. They adapted their strategy to a market that is buying less and less, and has been for a decade.

Guy figured out what's causing the 7900XTX to have performance issues from thermal throttling:

TLDR: Basically a design flaw with its heatsink and/or the vapor chamber within the heatsink.

der8auer is a German engineer well known especially on all things related to overclocking. Let's hope this will lead to some redesigning from AMD.

Fortunately the AIC variants aren't having that issue. This is why @Slobodan opted to purchase an AIC. Smooth sailing for those buyers with a far superior value than what NVIDIA is offering in its 4080.

What a bullet dodge lol

It is a bit funny, too, when you think about it, because it was only with the launch of the RTX 2000 series that the reference designs stopped being a running joke. Prior to that, nobody even looked at the reference cards. They were almost always blower designs of cheapass quality, were awful, and pretty much universally avoided by self-builders.

Plenty of people bought reference cards before the RTX 2000 series. If you’re building a custom loop, you choose a reference card because they’re cheaper.

Why pay extra for a fancy cooler when you’re going to remove it?

Hoping the system will last me 10 years with a GPU switch after about 6.

Engadget said: The latest Neo G9, which Samsung started teasing in November, has an 8K display with a resolution of 7,680 x 2,160 pixels. Samsung claims it's the first dual UHD mini-LED monitor. It has a 1,000,000:1 contrast ratio and HDR 1000 support, along with a matte display to absorb light and minimize glare. The Neo G9 may be a viable option for high-performance gaming, given its 240Hz refresh rate and 1ms response time. In addition, Samsung says it's the first gaming monitor with DisplayPort 2.1 connectivity as well. The company will reveal more details later, including the all-important price.

Yes, DisplayPort 2.1! We've all been waiting on it, but not for a silly 7680x2160 unit no one can afford. We were waiting on it because DP 2.1 is required for sufficient bandwidth to drive a mere 8-bit (i.e. true SDR color) 3440x1440 ultrawide at 240Hz. If you were ever wondering, which you probably weren't, that's why you've never seen those before. All the gaming 1440p ultrawides on the market have been 100Hz-144Hz, usually, at most 175Hz. DisplayPort 1.4 couldn't push more. With the arrival of DisplayPort 2.0/2.1 and HDMI 2.1 on actual GPUs that has finally changed. It's going to usher in a whole new class of ultrawides.
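For anyone who wants to sanity-check the bandwidth point above, here is a rough back-of-the-envelope sketch in Python. It is not from the post: the function and dictionary names are made up for illustration. It only counts visible pixels (no blanking intervals) and assumes uncompressed RGB with no DSC, so the figures are a lower bound on what the link actually has to carry. The link capacities are the standard four-lane payload rates after line coding (HBR3 with 8b/10b for DP 1.4, UHBR20 with 128b/132b for DP 2.1).

```python
# Payload capacity in Gbit/s after line-coding overhead, 4 lanes each.
LINKS_GBPS = {
    "DP 1.4 (HBR3, 8b/10b)": 4 * 8.1 * 8 / 10,           # 25.92 Gbit/s
    "DP 2.1 (UHBR20, 128b/132b)": 4 * 20.0 * 128 / 132,  # about 77.58 Gbit/s
}


def active_pixel_gbps(width, height, refresh_hz, bits_per_channel=8):
    """Uncompressed RGB data rate for visible pixels only, in Gbit/s.

    Assumption: blanking intervals and DSC are ignored, so this is a
    lower bound on the real uncompressed link requirement.
    """
    return width * height * refresh_hz * bits_per_channel * 3 / 1e9


def fits(width, height, refresh_hz, link, bits_per_channel=8):
    """True if the lower-bound data rate is within the link's payload rate."""
    needed = active_pixel_gbps(width, height, refresh_hz, bits_per_channel)
    return needed <= LINKS_GBPS[link]


if __name__ == "__main__":
    for hz in (144, 175, 240):
        needed = active_pixel_gbps(3440, 1440, hz)
        for link, capacity in LINKS_GBPS.items():
            verdict = "fits" if needed <= capacity else "does NOT fit"
            print(f"3440x1440 @ {hz}Hz 8-bit: {needed:.2f} Gbit/s "
                  f"{verdict} in {link} ({capacity:.2f} Gbit/s)")
```

Under those assumptions, 3440x1440 at 240Hz needs about 28.5 Gbit/s for the visible pixels alone, which is already over the 25.92 Gbit/s DP 1.4 can carry, while 175Hz comes in around 20.8 Gbit/s. Since blanking is left out, a "fits" result here is only approximate; a "does not fit" result holds regardless.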

No, they didn't. Wrong. Reference cards never topped bestseller charts on Amazon, Newegg, or other sellers. In fact, historically, AMD only produced a single batch of reference cards as a one-off release at the launch of the lineup.

"Plenty of people" right before "custom loop".

Show me the statistics on what percentage of discrete GPUs prior to the RTX 2000 series were outfitted with custom aftermarket liquid coolers? Or even today? Spoiler alert: it's as insignificant and niche as those with custom-built CPU coolers today versus liquid AIOs (and air coolers). It's a tiny fraction of the market.