Okay, this makes way more sense. NVIDIA's board partners have confirmed the specs, and the 4080 12GB will, in fact, be nerfed in more ways than just the amount of VRAM:

https://videocardz.com/newz/galax-c...104-400-gpus-for-geforce-rtx-4090-4080-series

However, this raises the question: why in the hell is NVIDIA calling the 12GB version a "4080"? It makes no sense. Why not just call it the 4070?

And that flips on the light bulb: because

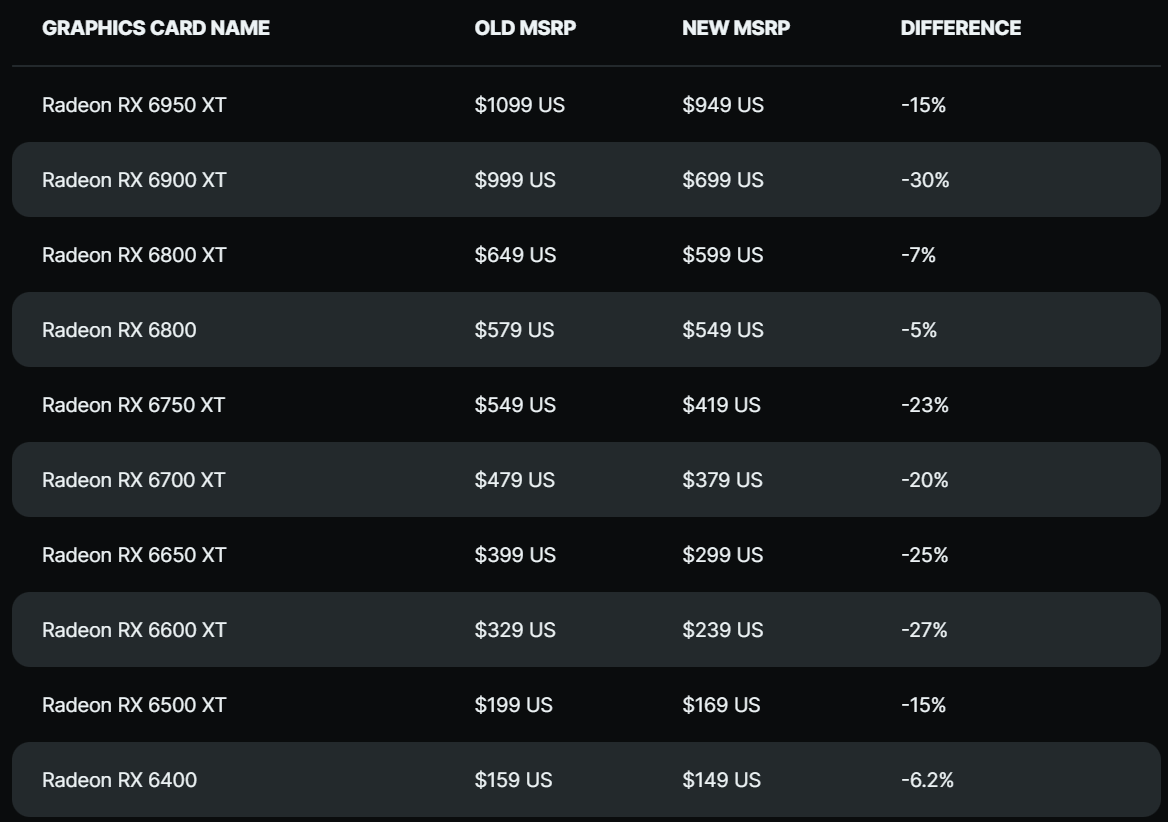

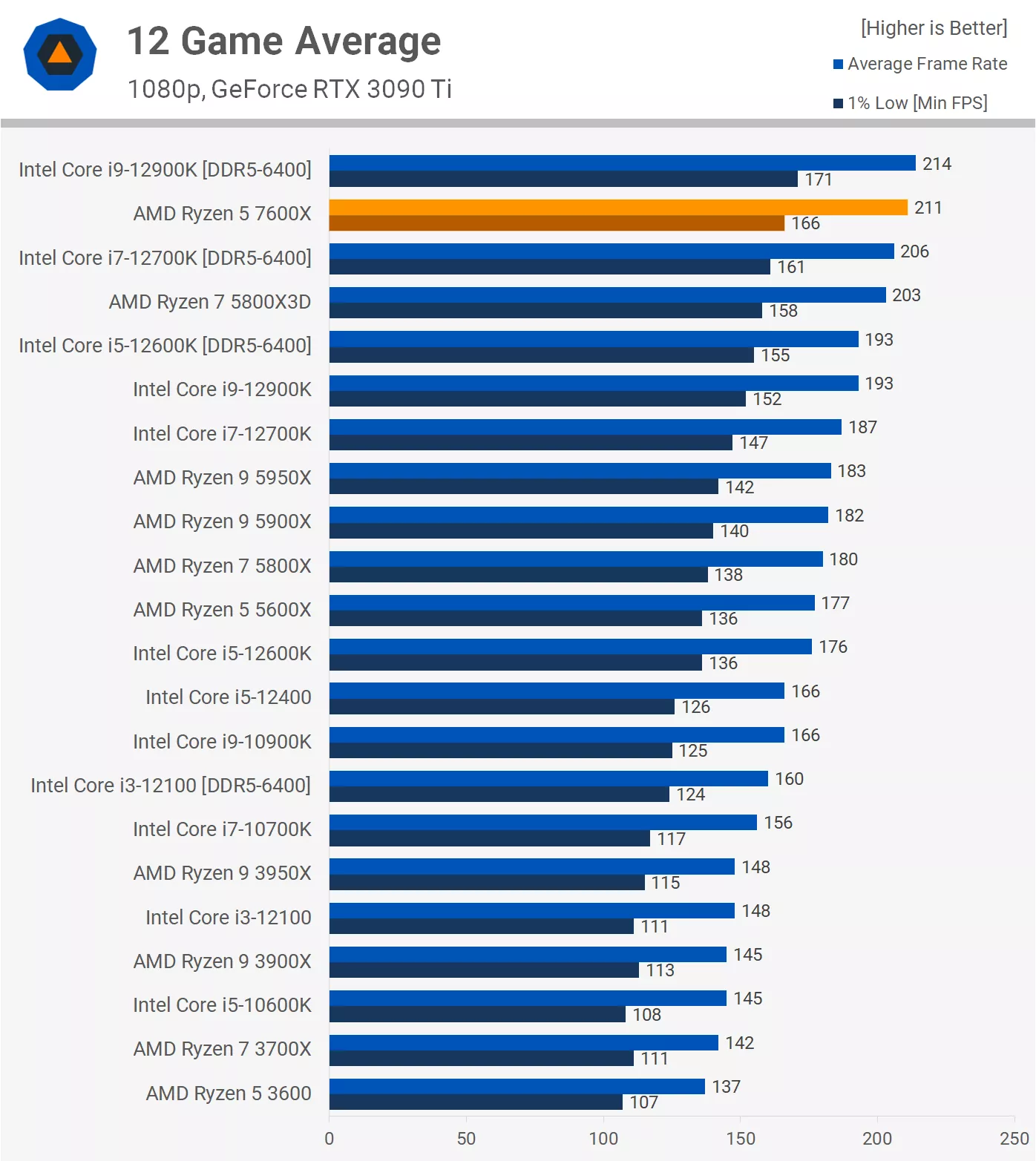

then the whole world would realize the true nature of the price hike they just pulled. They'd notice the 4080 is $1199 when the 3080 released at just $699, when NVIDIA wants people to see the $899 lower end, and think that is the true entry for a 4080, while the variant with more VRAM is some premium, but that isn't true. Because, of course, NVIDIA would be forced to confront the unavoidable truth they just nearly doubled the price on the xx80 line. Meanwhile, the 4070, if more coherently labeled that, at $899, would also raise eyebrows because the RTX 3070's launch MSRP of $499 would similarly stand out against that.

NVIDIA just increased prices over the last gen by 70%-80%, folks. Smack dab in the middle of the crypto crash.
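For anyone who wants to check that 70%-80% figure, here's a quick back-of-the-envelope sketch using the launch MSRPs quoted above. It assumes the comparison pairs the 4080 16GB with the 3080 and the 4080 12GB with the 3070, and it ignores inflation entirely.

```python
# Launch MSRPs in USD as quoted in this post (assumed pairings, no inflation adjustment).
pairs = {
    "RTX 4080 16GB vs RTX 3080": (1199, 699),
    "RTX 4080 12GB vs RTX 3070": (899, 499),
}


def pct_increase(new, old):
    """Return the percentage increase from the old price to the new price."""
    return (new - old) / old * 100


for label, (new, old) in pairs.items():
    print(f"{label}: +{pct_increase(new, old):.1f}%")
```

That works out to about +71.5% for the 16GB card and +80.2% for the 12GB card.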