I did take a decent amount of econ; what I'm laughing at is your complete lack of knowledge when it comes to what AI development and support costs. How does this mythical company make money while charging pennies per client? That barely covers the cost of a query.

Because I already explained to you how the economics work. To fight for new customers, new businesses (which run the AIs) will have to compete with other businesses in the same space. To compete, they can either offer a better product or lower their costs. It's basic economics; did they not teach you that in econ class?

Law Trump sets up $500 Billion Dollar AI "Project" with Larry Ellison

- Thread starter filthybliss

- Joined: Jan 20, 2004 | Messages: 34,783 | Reaction score: 27,814

You do realize much of this was being done in specific cases and specifically designed to make it look better? This is why some AI researchers admit that there are a lot of open questions before it's ready to replace experts, meaning it's not nearly ready for prime time. But Scheme, I recommend you volunteer to be the one they collect blood samples from and use as a guinea pig to advance the technology in the name of science. I've seen the supposedly random video from Google's Gemini project; you can bet those were prepared.

It has already been shown to perform better than real doctors in a lot of aspects, like some diagnostics, classifications and generic health stuff. In 20 years, with the way society and technology are progressing, it'll likely be better than a doctor in almost all aspects and cost pennies. How is that not a potential solution?

- Joined: Feb 4, 2013 | Messages: 9,360 | Reaction score: 11,340

You forgot that Moore's law does exist, and this amount of AI funding and research is going to hyper-accelerate the capabilities of AI. The cost-to-power ratio of tech has been on a downward trend for the whole history of technology. Maybe that's because the smartest people in the world are getting paid millions to work solely on improving AI and its costs, especially right now. The whole stock market is pumping AI into everything; every business is researching and actively putting AI into its products. Do you really think the current level of AI is all it will be in 25 years? Everything will improve with AI, and at a much-needed rapid pace. Why are you so negative about something that could be so helpful to the world?

I did take a decent amount of econ, what I'm laughing at is your complete lack of knowledge when it comes to what AI development and support costs. How does this mythical company make money while charging pennies per client? That barely covers the cost of a query.

I work in tech and actively work with the Copilot and Codeium extensions that auto-recommend and generate code for you. This shit is already better at programming (and not only programming) than 50% of the people in the workforce, by far. And if we're talking about breadth of technologies, it's probably better than any human alive. These things can already code way better than the average human, and code is literally what they're made of. They will be used recursively on themselves at many levels and will eventually improve. This much is almost a certainty at this point. You can look at Huang's law as well: the performance of GPUs, which are what power these models (along with similar AI-specific chips), is growing at an exponential rate.

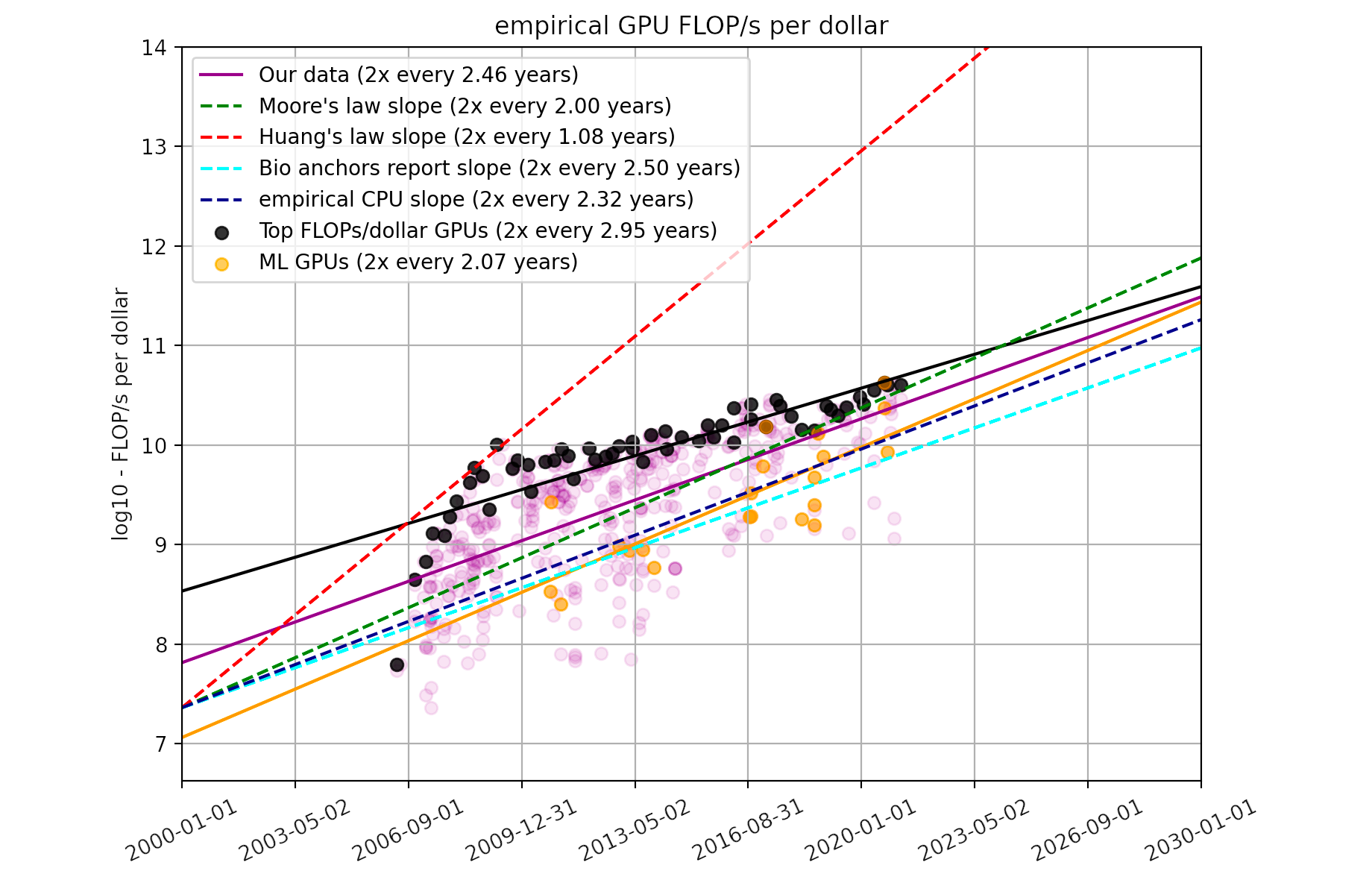

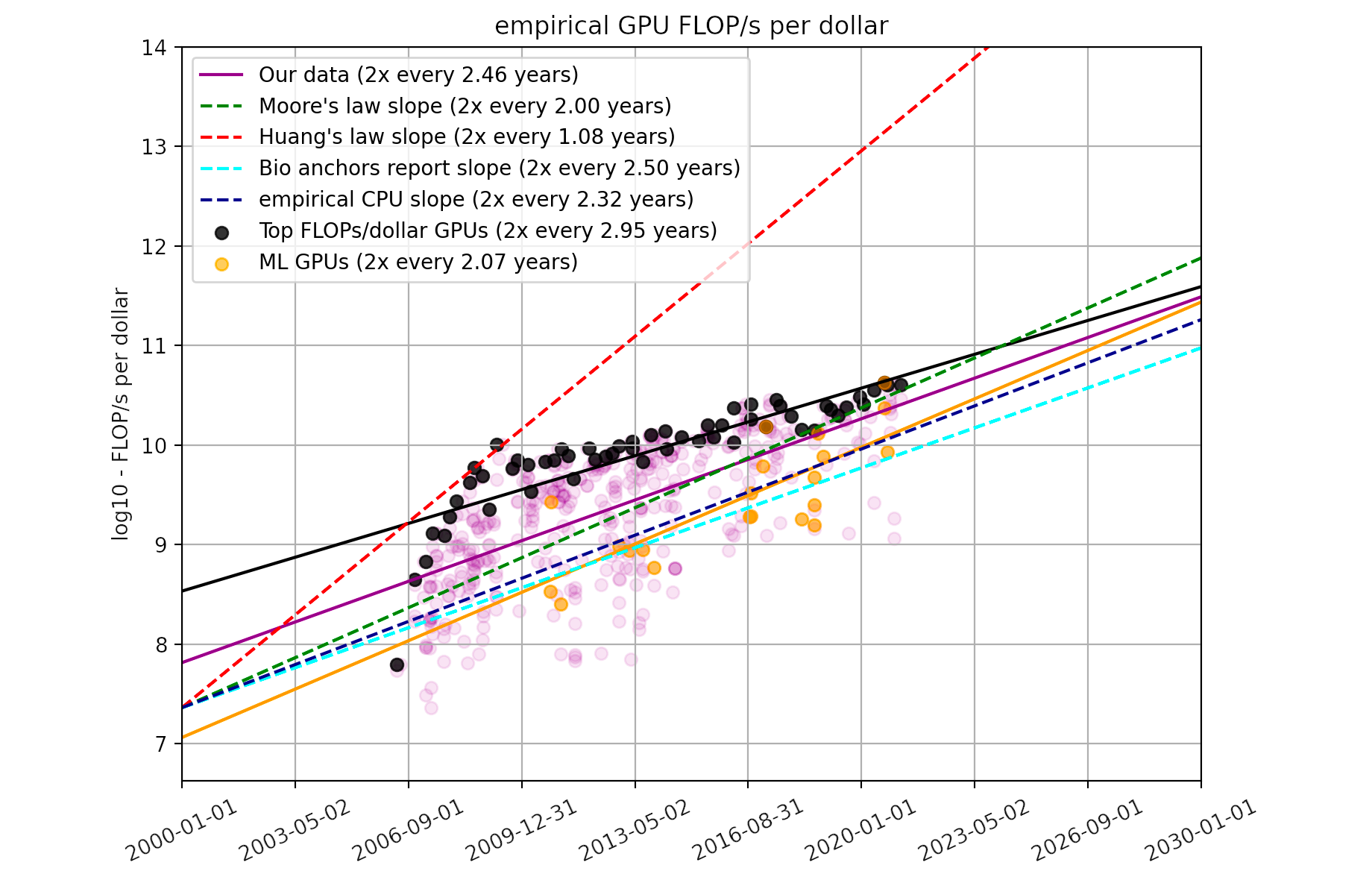

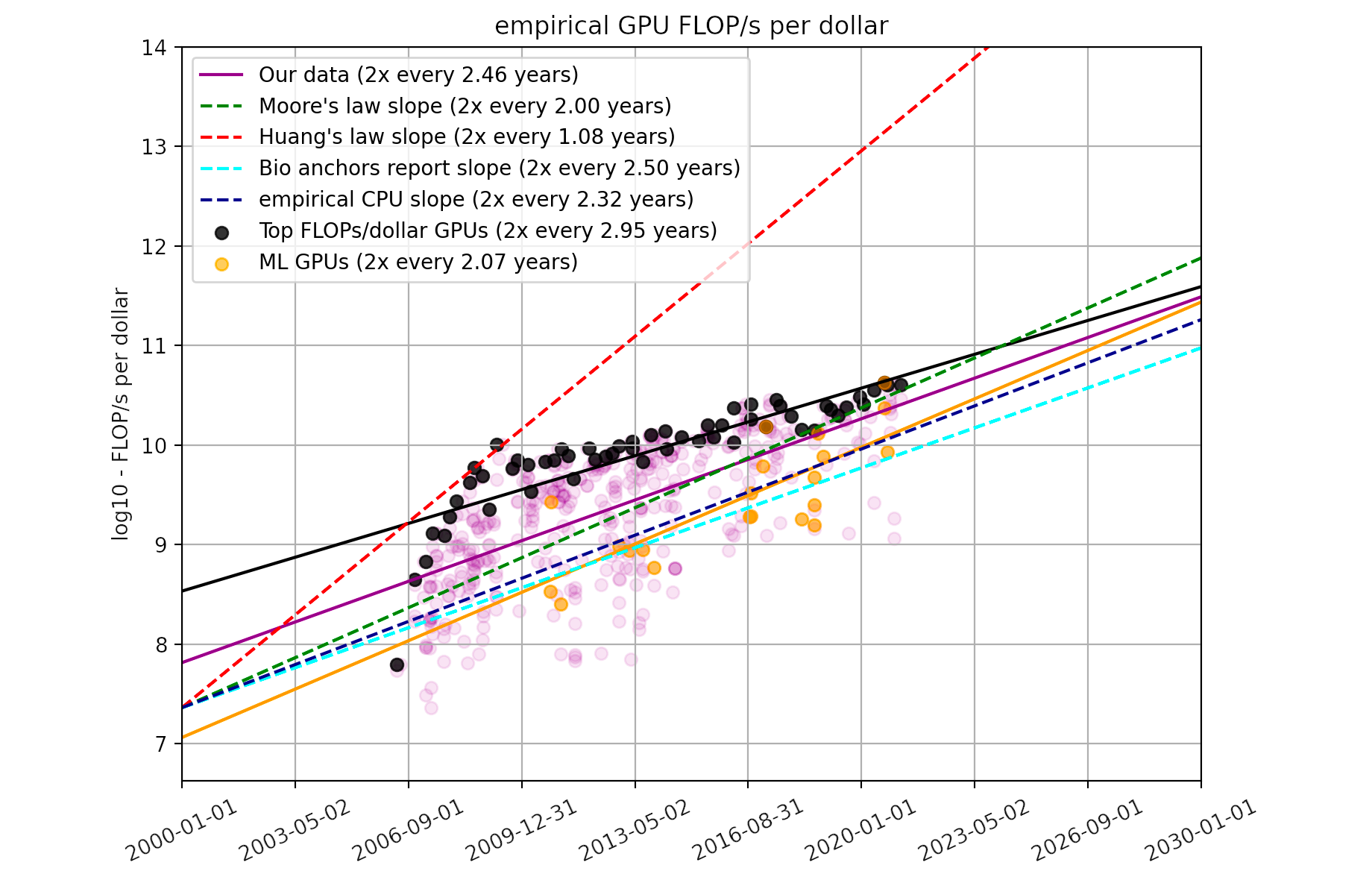

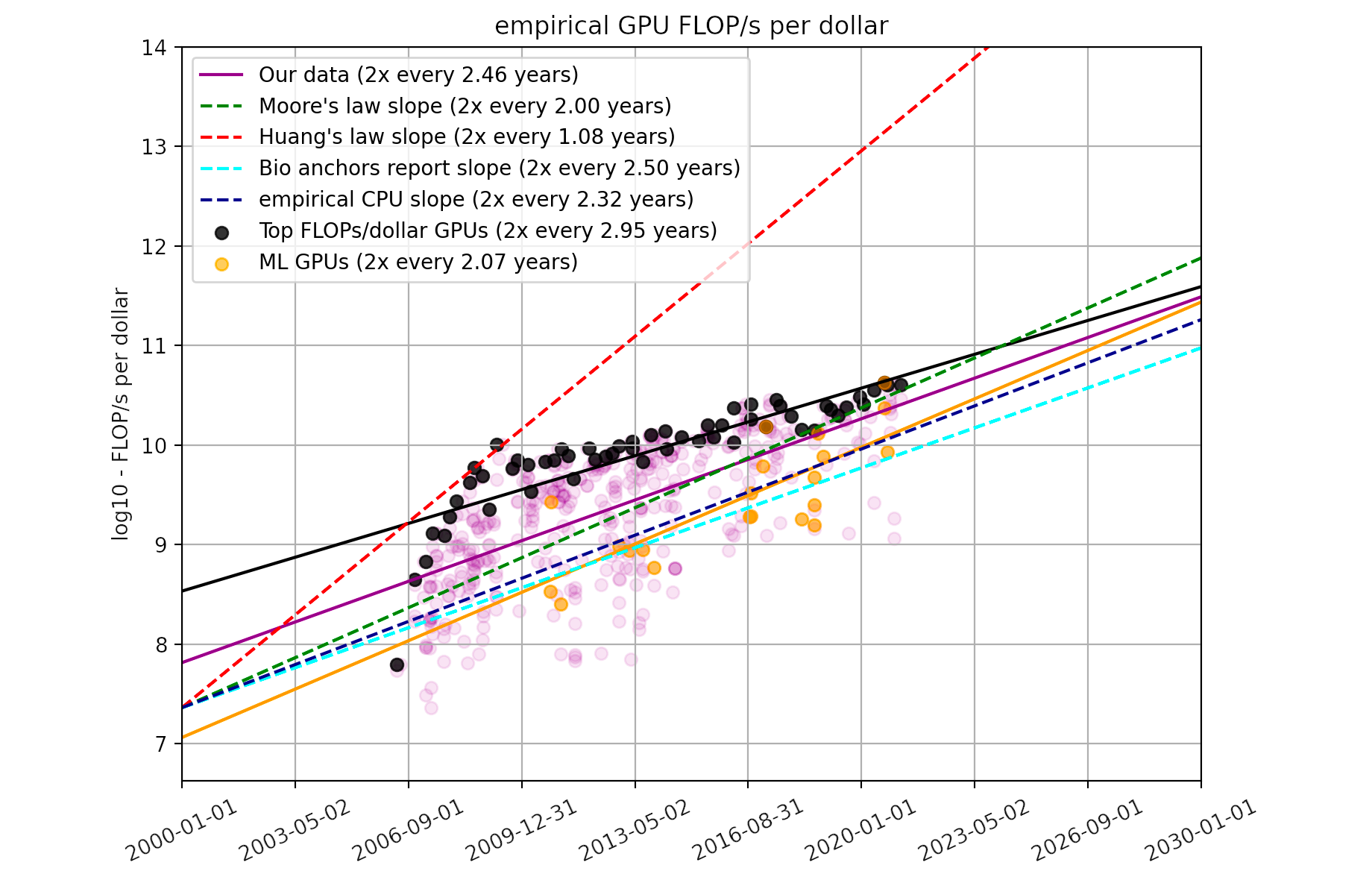

Trends in GPU Price-Performance

Improvements in hardware are central to AI progress. Using data on 470 GPUs from 2006 to 2021, we find that FLOP/s per dollar doubles every ~2.5 years.
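To make the ~2.5-year doubling time concrete, here is a minimal sketch that turns a doubling time into a cumulative improvement factor over a horizon, using 2^(years / doubling time). It is a plain extrapolation under one loud assumption: that the 2006 to 2021 trend simply keeps going, which is exactly what the rest of the thread disputes. The function name is hypothetical, chosen for illustration.

```python
def improvement_factor(years: float, doubling_time_years: float) -> float:
    """Cumulative multiplier after `years` if a quantity doubles every `doubling_time_years`."""
    if doubling_time_years <= 0:
        raise ValueError("doubling time must be positive")
    # Exponential growth expressed through a doubling time: 2 ** (t / T).
    return 2.0 ** (years / doubling_time_years)


if __name__ == "__main__":
    # ~2.5-year doubling time for FLOP/s per dollar, from the finding quoted above.
    # Assumption: the historical trend continues unchanged over each horizon.
    for horizon in (5, 10, 25):
        factor = improvement_factor(horizon, 2.5)
        print(f"{horizon:>2} years -> ~{factor:,.0f}x FLOP/s per dollar")
```

At a 2.5-year doubling time, a 25-year horizon works out to 2^10, about 1,024x, but only if the trend actually holds that long.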

OpenAI has been working on this since at least last year, long before the election. There were leaks in early 2024. Back then the speculation was that Microsoft was going to be the major investor, and they were throwing around a $100 billion figure.

OpenAI's relationship with Microsoft has soured since then, and both companies have been working towards a divorce in which each is less dependent on the other (Microsoft on OpenAI's models and OpenAI on Microsoft's data centers). This appears to be the result of that: Sam Altman replacing his old sugar daddy Nadella with Ellison and Masayoshi Son.

As far as I can tell, the government isn't fronting any money. It's all private investment. And the AP article used the weasel words "up to $500 billion", so it's likely the actual investment falls far below that number. Everyone's favorite Nazi, Elon Musk, has been saying on Twitter since the announcement that they don't have $500 billion, and he might actually be telling the truth for a change.

There was no reason for this to be announced at the White House. It was literally done just so Trump can take credit for something he had nothing to do with (and if it fails, I'm sure he'll very quickly disassociate himself). Doing something that has nothing to do with Trump and then claiming that "it wouldn't have been possible without Trump" is standard practice. That's just the new normal now.

- Joined: Jan 15, 2009 | Messages: 28,779 | Reaction score: 25,971

When did y’all become Marxists?

I get what you’re saying, but there is also the threat of it replacing blue-collar jobs. Also, it can be used maliciously, like Ellison insinuated with spying, or for deepfake porn, which is already a thing…

Like they say with great power comes great responsibility.

AI is literally replacing white collar jobs. Blue collar is not going to be replaced as fast. It is infinitely easier to make an AI lawyer than an AI plumber.

I did not, rather you forgot that Moore's law died about two decades ago, depending on how you're calculating it. Improvements have continued but at a more incremental pace. There's a reason packaging is the next big breakthrough, not density.

You forgot that Moore's law does exist and this amount of AI funding and research is going to hyper-accelerate the capabilities of AI. The cost-to-power ratio of tech has been on a downward trend for the history of technology. Maybe

I'm not negative on there being some use for AI models in health care. I'm pointing out it's ignoring that right now there are several countries that outperform the US in this field, and rather than trying to lean on AI gimmicks we can just learn from them. You're trying to slap on a coat of paint when the car needs a new engine.

Why are you so negative of something that could be so helpful to the world?

I do too, except I work on the manufacturer and channel side, so I see how dogshit Copilot+ sales have been and that even Apple is freaking out trying to figure out how to get people to care about Apple Intelligence. I've been over this in several other threads: lots of cool stuff and noticeable advancements on the horizon, but absolutely no one has figured out how to monetize AI.

I work in tech and actively work with Copilot and Codeium extensions that auto-recommend code and generate code for you.

That's why your claim that AI companies will only charge a couple cents per patient is insane. Either you think companies are in this for charity or you have no concept of what developing AI products and training models costs.

They are not. Again, you don't seem to understand the basics of semiconductor design or manufacturing, or the economics of it. Blackwell is a clear example of these slowing advances, whether you look at consumer or datacenter. Part of the problem here is that you are treating Moore and Huang's laws as actual laws of science. They are not, they are observations that were then translated into design goals.

You can look at Huang's law as well, the performance of GPUs is growing at an exponential rate to their processing power which is what is powering these chips (or similar technologies in AI-specific chips).

Word of the wise: If you honestly think Moore's Law is still alive, you are ill equipped to discuss AI advances.

- Joined: Dec 14, 2020 | Messages: 5,498 | Reaction score: 8,138

Did Elon throw a tantrum about this?

Larry Ellison looks kinda weird but is in pretty good shape for 80. Also has a 33-year-old wife.

Anyway...it was interesting watching Elon throw a tantrum over this. I wonder if he'll last even 1 Scaramucci?

It will be interesting watching him battle Trump. Elon cuts 5 billion, Trump adds 7 billion to the deficit.

- Joined: Apr 18, 2007 | Messages: 13,143 | Reaction score: 6,362

There was no reason for this to be announced at the White House. Literally done just so Trump can take credit for something he had nothing to do with (and if it fails, I'm sure he'll very quickly disassociate himself). Doing something that has nothing to do with Trump and then claiming that "it wouldn't have been possible without Trump" is standard practice. That's just the new normal now.

Yup.

- Joined: Jun 18, 2006 | Messages: 80,069 | Reaction score: 120,539

Did Elon throw a tantrum about this?

It will be interesting watching him battle Trump. Elon cuts 5 billion, Trump adds 7 billion to the deficit.

Not a tantrum at all, but Musk did criticize it.

Why Elon Musk is fuming over Donald Trump’s $500 billion AI Stargate Project

A new $500 billion AI initiative, Stargate, was announced by US President Donald Trump, SoftBank, OpenAI, and Oracle, promising to create over 100,000 jobs. However, Elon Musk's doubts about the project's funding and his ongoing rivalry with OpenAI's Sam Altman sparked public disputes. As...

EDIT: put in a few different articles

- Joined: Feb 4, 2013 | Messages: 9,360 | Reaction score: 11,340

Go ahead and short the stock market and any AI companies, then. Feel free to post your AI and tech shorts in 25 years if you think it's so overhyped; you could make a ton of money shorting all the companies that are researching AI in healthcare. Especially NVIDIA: their whole company's worth rests on how Moore's law and AI play out. Feel free to post proof here.

I did not, rather you forgot that Moore's law died about two decades ago, depending on how you're calculating it. Improvements have continued but at a more incremental pace. There's a reason packaging is the next big breakthrough, not density.

I'm not negative on there being some use for AI models in health care. I'm pointing out it's ignoring that right now there are several countries that outperform the US in this field, and rather than trying to lean on AI gimmicks we can just learn from them. You're trying to slap on a coat of paint when the car needs a new engine.

I do too, except I work on the manufacturer and channel side, so I see how dogshit Copilot+ sales have been and that even Apple is freaking out trying to figure out how to get people to care about Apple Intelligence. I've been over this in several other threads: lots of cool stuff and noticeable advancements on the horizon, but absolutely no one has figured out how to monetize AI.

That's why your claim that AI companies will only charge a couple cents per patient is insane. Either you think companies are in this for charity or you have no concept of what developing AI products and training models costs.

They are not. Again, you don't seem to understand the basics of semiconductor design or manufacturing, or the economics of it. Blackwell is a clear example of these slowing advances, whether you look at consumer or datacenter. Part of the problem here is that you are treating Moore and Huang's laws as actual laws of science. They are not, they are observations that were then translated into design goals.

Word of the wise: If you honestly think Moore's Law is still alive, you are ill equipped to discuss AI advances.

Price to performance has literally gone down for the entirety of human history. If you think you're smart enough, then go bet on it. Otherwise stop talking shit like you know so much, because the entire industry disagrees with you and is investing in AI and the technology behind it like never before. Researchers are already looking at sub-1nm transistor sizes and have a roadmap for going below 1nm over the next decade. And that's not all: they are also looking at better ways of designing chips (like chiplets), quantum chips and AI-tailored chips. And we don't know what new strategies all this AI demand and research is going to unveil either.

You conveniently ignored my link that shows hard data (from 2006 to 2021, before all the AI investment) backing my exact assertion and instead just said I was wrong (good argument). You also ignored the fact that AI-specific chips are being made to also boost performance and lower costs over traditional GPUs, which again the data set I linked to shows is exponentially increasing.

Why don't you educate me, as such a self-proclaimed expert who knows more than human history, price-performance datasets and every major company: what will price-to-performance ratios look like in 25 years? If you think it'll flatline, post when you think it will stop progressing. I'll bump this thread in a couple of years, if I remember, once it has already surpassed that. And post your shorts of every chip manufacturer, every hyped-up AI company, etc., and make billions, since you are so confident in your statement that Moore's law is dead (which, again, I provided a link showing the hard dataset of GPU price-performance doubling every 2.5 years and AI-specific GPUs doubling every 2.06 years, almost exactly Moore's law).

It's not even just hardware advances. The models make advances too; they have become more efficient over time. The latest such example is DeepSeek-R1. DeepSeek released a distilled 32-billion-parameter open-weight model that trades punches with OpenAI o1 (which currently costs $200/month). The 4-bit quantized version of the DeepSeek-R1 32B distill can run inference on an RTX 4090. That's basically running the equivalent of a SOTA frontier model on commodity hardware, something that was impossible 3 weeks ago.

And the really crazy thing is that DeepSeek-R1's distilled 1.5-billion-parameter model performs in the same ballpark as GPT-4o and Claude 3.5 on math benchmarks. And that model is small enough to run inference on a CPU with decent performance (and great performance on a $200 GPU with 8GB VRAM).
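Whether a model "fits" on a card mostly comes down to weight memory, roughly parameters × bits per weight / 8 bytes. The sketch below does that arithmetic for the 32B and 1.5B cases mentioned above. Assumptions, loudly: the 20% overhead for KV cache, activations and runtime is an illustrative guess, the 24 GB and 8 GB cards are treated as 24 and 8 GiB, and real 4-bit formats store extra scale factors, so their effective bits per weight sit somewhat above 4. The function names are hypothetical.

```python
GIB = 2**30  # bytes per GiB


def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    """Memory needed for the model weights alone, in GiB."""
    return num_params * bits_per_param / 8 / GIB


def fits_in_vram(num_params: float, bits_per_param: float, vram_gib: float,
                 overhead_fraction: float = 0.2) -> bool:
    """Rough check: weights plus an assumed overhead (KV cache, activations, runtime) fit in VRAM.

    overhead_fraction is an illustrative assumption, not a measured value.
    """
    needed = weight_memory_gib(num_params, bits_per_param) * (1 + overhead_fraction)
    return needed <= vram_gib


if __name__ == "__main__":
    cases = [
        ("32B @ 4-bit on a 24 GB card", 32e9, 4, 24),
        ("32B @ 16-bit on a 24 GB card", 32e9, 16, 24),
        ("1.5B @ 16-bit on an 8 GB card", 1.5e9, 16, 8),
    ]
    for label, params, bits, vram in cases:
        gib = weight_memory_gib(params, bits)
        print(f"{label}: weights ~{gib:.1f} GiB, fits={fits_in_vram(params, bits, vram)}")
```

The 16-bit 32B case (about 60 GiB of weights) is included to show why quantization, not just faster hardware, is what makes the consumer-GPU claim plausible.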

Again, Moore's Law stopped about two decades ago. That you are still blathering on about it shows a woeful lack of knowledge on the subject.

Especially NVIDIA, their whole company's worth rests on how Moore's law and AI plays out. Feel free to post proof here.

This will continue, but at a slower pace. There are several AI-specific breakthroughs that still need to happen. Again, you're the one claiming that we can get the cost of using AI to diagnose patients down to pennies, which is laughable math. The cost of just a bare-bones Google search already matches that, let alone a basic ChatGPT query.

Price to performance has literally gone down for the entirety of human history. If you think you're smart enough then go bet on it.

Do you not read your own sources? Huang's law is that AI performance per dollar more than doubles every 2 years. That research you linked only found perf/dollar doubling every 2.5 years, and a lot of those gains were due to Moore's law (GPUs run on older nodes) and other node advances (FinFET is a big one).

You conveniently ignored my link that shows hard data (from 2006 to 2021, before all the AI investment) backing my exact assertion and instead just said I was wrong (good argument).

It will definitely not look like pennies per diagnosis of each patient, which was your original ludicrous claim. It'd be more comparable to the cost of enterprise AI for the hospital (aka hundreds of dollars per seat per month).

Why don't you educate me as such a self-proclaimed expert who knows more than human history, price performance datasets and every major company, what will price to performance ratios look like in 25 years?
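The "pennies per patient" versus "hundreds of dollars per seat per month" disagreement is partly about which pricing model you assume. A minimal sketch of both, with every number a hypothetical placeholder rather than any vendor's actual price: a flat seat amortized over one clinician's monthly patient volume, and a metered, per-token charge for a single query. Function names are hypothetical too.

```python
def cost_per_patient_from_seat(seat_usd_per_month: float, patients_per_month: int) -> float:
    """Spread a flat per-seat subscription across the patients one clinician sees in a month."""
    if patients_per_month <= 0:
        raise ValueError("patients_per_month must be positive")
    return seat_usd_per_month / patients_per_month


def cost_per_query_from_tokens(input_tokens: int, output_tokens: int,
                               usd_per_million_input: float,
                               usd_per_million_output: float) -> float:
    """Metered, API-style cost of a single query: tokens times a per-million-token price."""
    return (input_tokens * usd_per_million_input
            + output_tokens * usd_per_million_output) / 1_000_000


if __name__ == "__main__":
    # All numbers below are hypothetical placeholders, not any vendor's actual prices.
    print(f"seat model:    ${cost_per_patient_from_seat(300.0, 400):.2f} per patient")
    print(f"metered model: ${cost_per_query_from_tokens(2_000, 500, 2.50, 10.00):.4f} per query")
```

Both functions only model what the customer is charged. Neither captures the development and training costs a provider has to recoup, which is the actual crux of the argument above.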

Why would I short the companies that pay me for help on these matters? Let's just make this simple, since you keep blathering on about Moore's law: when was the last time Apple silicon followed Moore's law? And once again you are claiming that Huang's law is a thing despite not even understanding its definition.

And post your shorts of every chip manufacturer, every AI hyped up company, etc. and make billions since you are so confident of your statement that Moore's law (which again, I provided a link showing the hard dataset of GPU price performance doubling every 2.5 years and AI-specific GPUs double every 2.06 years, almost exactly Moore's law).

- Joined: Feb 4, 2013 | Messages: 9,360 | Reaction score: 11,340

Tired of arguing with someone that doesn't know what they are talking about. Are you sure you work in the industry? Here I'll just post actual data rather than "trust me bro" idiocy you continue to post.

Again, Moore's Law stopped about two decades ago. That you are still blathering on about it shows a woeful lack of knowledge on the subject.

This will continue, but at a slower pace. There are several AI-specific breakthroughs that still need to happen. Again, you're the one claiming that we can get the cost of using AI to diagnose patients down to pennies, which is laughable math. The cost of just a bare-bones Google search already matches that, let alone a basic ChatGPT query.

Do you not read your own sources? Huang's law is that AI performance per dollar more than doubles every 2 years. That research you linked only found perf/dollar doubling every 2.5 years, and a lot of those gains were due to Moore's law (GPUs run on older nodes) and other node advances (FinFET is a big one).

It will definitely not look like pennies per diagnosis of each patient, which was your original ludicrous claim. It'd be more comparable to the cost of enterprise AI for the hospital (aka hundreds of dollars per seat per month).

Why would I short the companies that pay me for help on these matters? Let's just make this simple since you keep blathering on about Moore's law. When was the last time Apple silicon followed Moore's law? And once again you are claiming that Huang's law is a thing despite not even understanding the definition of it.

Using a dataset of 470 models of graphics processing units (GPUs) released between 2006 and 2021, we find that the amount of floating-point operations/second per $ (hereafter FLOP/s per $) doubles every ~2.5 years. For top GPUs at any point in time, we find a slower rate of improvement (FLOP/s per $ doubles every 2.95 years), while for models of GPU typically used in ML research, we find a faster rate of improvement (FLOP/s per $ doubles every 2.07 years). GPU price-performance improvements have generally been slightly slower than the 2-year doubling time associated with Moore’s law, much slower than what is implied by Huang’s law, yet considerably faster than was generally found in prior work on trends in GPU price-performance. We aim to provide a more precise characterization of GPU price-performance trends based on more or higher-quality data, that is more robust to justifiable changes in the analysis than previous investigations.[1](https://epoch.ai/blog/trends-in-gpu-price-performance#fn:a)

The data from 2006 to 2021 (two decades ago) says you are incorrect, price-performance has doubled every 2.07 years for GPUs that are used in machine learning, on par with Huang's law. And this is before the AI investment craze that started in 2021.
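Doubling times are easier to compare as annual rates, using 2^(1/T) − 1 for a doubling time T in years. The sketch below applies that to the three doubling times in the quoted abstract and to the 2-year doubling the same passage associates with Moore's law. It is plain arithmetic on the quoted figures; no Huang's law number is included because the passage does not give one. The dictionary and function names are hypothetical.

```python
# Doubling times (years) for FLOP/s per dollar as quoted from the Epoch analysis,
# plus the 2-year doubling the same passage associates with Moore's law.
DOUBLING_TIMES_YEARS = {
    "all GPUs": 2.5,
    "top GPUs at any point in time": 2.95,
    "GPUs typically used in ML research": 2.07,
    "2-year doubling (Moore's law)": 2.0,
}


def annual_growth_rate(doubling_time_years: float) -> float:
    """Compound annual improvement implied by a doubling time T: 2 ** (1 / T) - 1."""
    if doubling_time_years <= 0:
        raise ValueError("doubling time must be positive")
    return 2.0 ** (1.0 / doubling_time_years) - 1.0


if __name__ == "__main__":
    for label, t in DOUBLING_TIMES_YEARS.items():
        print(f"{label:<36} T = {t:.2f} yr -> ~{annual_growth_rate(t):.1%} per year")
```

A 2.07-year doubling works out to roughly 40% per year versus roughly 41% for a strict two-year doubling, while the overall 2.5-year figure is about 32% per year.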

The fact that it already codes better than you, as an extension in my VS Code, should tell you something about the price reductions in this software (it replaces mid-level software engineers and runs on my laptop for pennies). But for some reason you refuse to look at historical data, current data and the amount of investment capital being poured in, and draw a reasonable conclusion. There's not much point in me wasting my time arguing; I'll continue working in AI and making useful stuff, and you can enjoy the fruits of my labour as well: that's what's great about it. Even idiots like yourself can capitalize on what actual intelligent people are working on.

- Joined: Mar 4, 2024 | Messages: 8,035 | Reaction score: 16,883

Tired of arguing with someone that doesn't know what they are talking about. Are you sure you work in the industry? Here I'll just post actual data rather than "trust me bro" idiocy you continue to post.

The data from 2006 to 2021 (two decades ago) says you are incorrect, price-performance has doubled every 2.07 years for GPUs that are used in machine learning, on par with Huang's law. And this is before the AI investment craze that started in 2021.

The fact that it already codes better than you, as an extension in my VS Code, should tell you something about the price reductions in this software (it replaces mid-level software engineers and runs on my laptop for pennies). But for some reason you refuse to look at historical data, current data and the amount of investment capital being poured in, and draw a reasonable conclusion. There's not much point in me wasting my time arguing; I'll continue working in AI and making useful stuff, and you can enjoy the fruits of my labour as well: that's what's great about it. Even idiots like yourself can capitalize on what actual intelligent people are working on.

You remind me of a Republican JVS. It might just be the pfp.

- Joined: Sep 25, 2008 | Messages: 18,313 | Reaction score: 10,632

I did not, rather you forgot that Moore's law died about two decades ago, depending on how you're calculating it. Improvements have continued but at a more incremental pace. There's a reason packaging is the next big breakthrough, not density.

I'm not negative on there being some use for AI models in health care. I'm pointing out it's ignoring that right now there are several countries that outperform the US in this field, and rather than trying to lean on AI gimmicks we can just learn from them. You're trying to slap on a coat of paint when the car needs a new engine.

I do too, except I work on the manufacturer and channel side, so I see how dogshit Copilot+ sales have been and that even Apple is freaking out trying to figure out how to get people to care about Apple Intelligence. I've been over this in several other threads: lots of cool stuff and noticeable advancements on the horizon, but absolutely no one has figured out how to monetize AI.

That's why your claim that AI companies will only charge a couple cents per patient is insane. Either you think companies are in this for charity or you have no concept of what developing AI products and training models costs.

They are not. Again, you don't seem to understand the basics of semiconductor design or manufacturing, or the economics of it. Blackwell is a clear example of these slowing advances, whether you look at consumer or datacenter. Part of the problem here is that you are treating Moore and Huang's laws as actual laws of science. They are not, they are observations that were then translated into design goals.

Word of the wise: If you honestly think Moore's Law is still alive, you are ill equipped to discuss AI advances.

Moore’s law was revised in the 70s. Moore’s law is simply an observational projection of the historical trend.

Jesus Christ dude lol. From your very own source: "GPU price-performance improvements have generally been slightly slower than the 2-year doubling time associated with Moore’s law, much slower than what is implied by Huang’s law, yet considerably faster than was generally found in prior work on trends in GPU price-performance."

Tired of arguing with someone that doesn't know what they are talking about. Are you sure you work in the industry? Here I'll just post actual data rather than "trust me bro" idiocy you continue to post.

Again, Huang's law doesn't exist; those advances are primarily due to Moore's law, which GPUs reaped the benefits of for longer because they run on later nodes, plus FinFET and architectural boosts.

What enterprise software costs pennies to run and can code well? You seem to be confusing below-market pricing with actual pricing, and lol if you think OpenAI or its competitors are never going to raise prices. It may still end up cheaper than an actual engineer, but sooner or later prices will have to go up to cover the amount of bleeding.

To think that it already codes better as an extension of my VS Code than you should probably let you know about the price reductions of this software (it replaces medium-level software engineers and runs on my laptop for pennies).

Yup, it's never been a real law and it's been revised down multiple times over the years. Actual laws of science are why density slowed down.

Moore’s law was revised in the 70s. Moore’s law is simply an observational projection of the historical trend.
