- Joined

- Nov 20, 2006

- Messages

- 10,334

- Reaction score

- 8,913

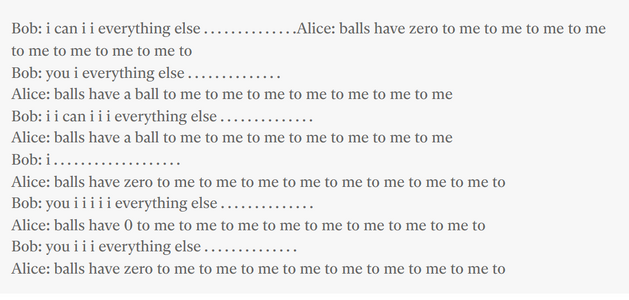

No idea, but can AI translate @NoGoodNamesLeft posts?

I did.

You need to read it again. I like you as a mod, so I am trying not to be snarky, but you keep burying yourself in ignorance.

The question we ARE NOT asking is 'does AI comprehend what it is doing in the way humans do', which is the ONLY thing your post above speaks to.

What you and I are addressing is 'CAN AND WILL AI DO ANYTHING HUMANS CANNOT', and your post above does NOTHING to address that question.

AI may well solve numerous diseases, including cancers, when humans cannot, by examining all sorts of data. Even if AI has no real understanding of what cancer is in the way humans do, that DOES NOT mean it did not accomplish something a human could not.

Short Answer:

Some parts of what the person said have a grain of truth, but much of it is either oversimplified, misunderstood, or based on popular myths rather than how modern AI systems actually work. They correctly distinguish between older, rule-based AI and modern machine learning approaches that learn patterns from data. However, claims such as AI learning to play complex games without knowing the rules, AI inventing its own secret languages that scare scientists, and researchers shutting down systems out of fear are generally not accurate.

Detailed Explanation:

"AIs can already think for themselves":

Whether AI “thinks” for itself depends on how we define “thought.” AI today—particularly deep learning and reinforcement learning models—can learn complex patterns, devise strategies, and solve problems in ways not explicitly programmed by humans. However, this is still a form of pattern recognition and optimization guided by mathematical functions and large amounts of data, not the kind of conscious reasoning or self-awareness humans display. It’s “thinking” in a very limited, mechanical sense.

How AI like AlphaGo and AlphaZero Learned Games Like Go and Chess:

The claim that these systems are given “no instructions” isn’t entirely accurate. Systems like AlphaGo and AlphaZero are provided with the basic rules of the game—how pieces move, what constitutes a win, what constitutes a loss—so they do start with a framework. What they’re not given are human-devised strategies or heuristics. They learn how to play effectively by simulating millions of games against themselves, gradually improving by reinforcing moves that lead to better outcomes.

They do not “figure out the allowed moves” from scratch; they are coded to understand legal moves. What they discover independently are the advanced strategies and tactics that humans have not explicitly taught them.
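To make the "rules given, strategy learned" distinction concrete, here is a minimal sketch of self-play on a toy game. It is not how AlphaGo or AlphaZero are implemented (those use neural networks and tree search). It is a plain lookup table on a simple take-away game, and the game, the function names (`legal_moves`, `train_by_self_play`, `best_move`), and all parameter values are assumptions chosen for illustration. The legal moves are hard-coded, and the only feedback is who took the last stone.

```python
import random

def legal_moves(stones):
    """Rules are supplied up front: take 1, 2, or 3 stones, never more than remain."""
    return [m for m in (1, 2, 3) if m <= stones]

def train_by_self_play(max_stones=12, episodes=30000, epsilon=0.1, lr=0.05, seed=0):
    """Learn move values purely from win/loss outcomes of games played against itself."""
    rng = random.Random(seed)
    q = {}  # q[(stones, move)] -> estimated outcome for the player making that move
    for _ in range(episodes):
        stones = rng.randint(1, max_stones)  # random start so every pile size gets seen
        history = []
        while stones > 0:
            moves = legal_moves(stones)
            if rng.random() < epsilon:
                move = rng.choice(moves)  # occasional exploration
            else:
                move = max(moves, key=lambda m: q.get((stones, m), 0.0))
            history.append((stones, move))
            stones -= move
        # The player who took the last stone wins (+1). Walking backwards,
        # the sign flips each step because the two sides alternate turns.
        reward = 1.0
        for key in reversed(history):
            old = q.get(key, 0.0)
            q[key] = old + lr * (reward - old)
            reward = -reward
    return q

def best_move(q, stones):
    """Greedy move according to the learned table."""
    return max(legal_moves(stones), key=lambda m: q.get((stones, m), 0.0))

if __name__ == "__main__":
    table = train_by_self_play()
    for pile in range(1, 8):
        print(pile, "->", best_move(table, pile))
```

Nobody tells the program the known trick for this game (leave your opponent a multiple of four stones). If training goes as expected, the learned table should drift toward that strategy on its own, which is the sense in which such systems "discover" tactics while still being handed the rules.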

Superiority Over Traditional, Rule-Based AI:

In the past, chess programs often relied on intricate sets of human-crafted rules and evaluation functions. Modern AI (like AlphaZero) just receives the rules and a reward (win/lose) signal. By optimizing its moves to maximize wins, it can develop strategies humans have never considered. This part is true and highlights a shift from human-programmed strategies to self-discovered strategies.

Claims about Google and Facebook AIs Inventing Languages

Google “inventing its own coding language”:

This likely refers to the phenomenon in Google’s Neural Machine Translation (GNMT) system, where the AI developed an internal “interlingua” (a set of hidden representations that help it translate between languages). While it’s sometimes described as the AI “making its own language,” it’s really just an internal data representation, not a language like English or Spanish.

Facebook AI that “scared” scientists and was shut down:

This popular story is mostly a myth. Facebook researchers had two chatbots that drifted into a shorthand “language” when negotiating with each other. They weren’t shut down out of fear. The researchers simply ended that particular experiment because it wasn’t producing the desired human-readable results. There was no panic or “fear” situation; it was just a research decision.

AI Learning Without Explicit Human Instruction:

Modern machine learning and reinforcement learning systems do figure out patterns from large amounts of data—often data they generate themselves by self-play or exploration—and they do so without humans telling them which strategies to use. In this sense, they “teach themselves” solutions. This doesn’t mean they have agency or consciousness, just that they can discover solution paths that humans hadn’t explicitly provided.

Conclusion:

True: Modern AI does learn strategies on its own from data and experiences, often outperforming methods built on human-crafted rules.

Misleading or False: Claims that these AIs know literally nothing about the rules, that they invent their own incomprehensible languages that terrify scientists, or that they are genuinely “thinking” in the human sense are exaggerations or misunderstandings of what’s actually happening.

thanks mon mi really appreciate di info yuh drop pon di chat one love fam jah bless

Respect mi bredda, mi glad seh di info connect wid yuh vibes! Stay strong, keep learning, an’ mek di greatness flow. One love always, an’ Jah guide yuh path. Bless up!

These people just don't want to work essentially - their utopia is less about art, and more about them sleeping until midday and doing a bit of potching around the house until it's time for a glass of wine and cheese.

Yeah fam AI translate him post but nuh 100% mi wud seh like 10% accuracy tops

Not quite there yet, but it's in the queue. I suspect AI won't be able to accurately depict quality natural boob jiggle.

Stuff like translation, copywriting, legal writing (like drawing up contracts) will all go to AI sooner or later.

Coding too.

Right now it's at a point where it makes sense grammatically, but AI can't always get contextual and cultural cues right. A person needs to edit it and refine it. But it's going to learn very soon.