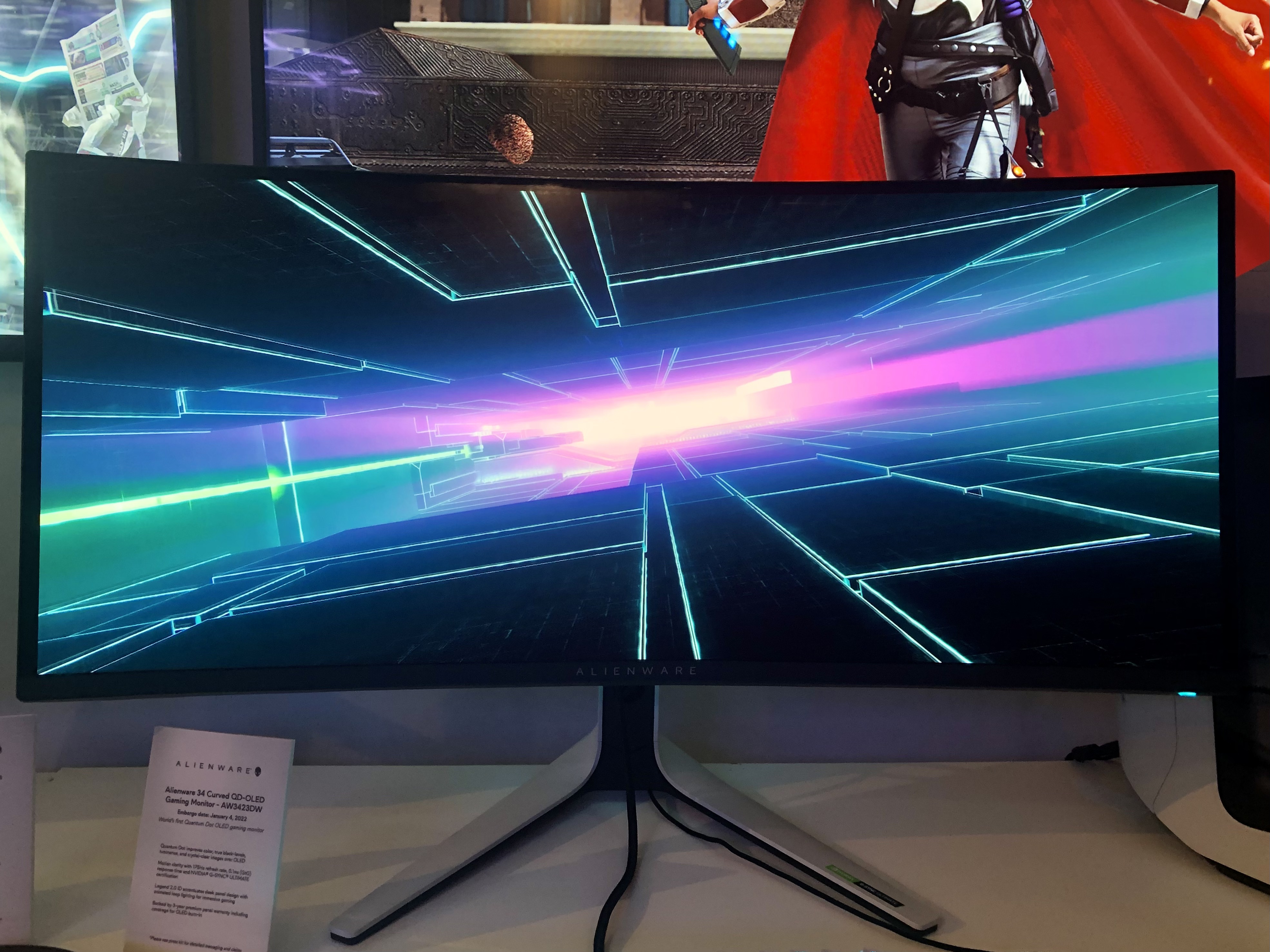

Dell's Alienware monitor that uses Samsung's quantum dot OLED (QD-OLED) tech will arrive this spring for a surprisingly reasonable $1,299, the company announced via a tweet. Dell first unveiled the curved, 34-inch gaming display at CES promising the ultra-high contrast of OLED displays with improved brightness, color range and uniformity.

That price might not seem cheap, but other OLED monitors can cost far more. LG's 32-inch UltraFine OLED model, while not exactly ideal for gaming, costs $3,999, and even its 27-inch UltraFine model is $2,999. Alienware's 55-inch OLED gaming monitor currently sits at $2,719 on Amazon, and was first released in 2019 for $3,999. Size is obviously a factor here, but it's still nice to see a monitor that wields new QD-OLED tech sitting well below the $2,000 mark.

The Alienware model has specs aimed more at gamers than content creators, though, with a 3,440 x 1,440 resolution, a 175 Hz refresh rate, 99.3 percent DCI-P3 color gamut, 1,000,000:1 contrast ratio and 250 nits of typical brightness with a 1,000-nit peak. It also offers HDR, conforming to the minimum DisplayHDR True Black 400 standard for OLED displays. At 250 nits, it's expected to have a lower typical brightness than many modern LED monitors.

Samsung's QD-OLED technology uses blue organic light-emitting diodes whose light passes through quantum dots to generate red and green. That compares to standard OLED, which uses blue and yellow OLED compounds. Blue has the strongest light energy, so QD-OLED in theory offers more brightness and efficiency. Other advantages include a longer lifespan, more extreme viewing angles and less potential burn-in. Long story short, Samsung's QD-OLED panel is supposed to be like traditional OLED, which is known for its impressive contrast brought on by rich, deep blacks, but with more consistently vivid color across brightness levels.
