If Aliens Attack: Visitors to Earth Will Likely Be Robots

Link Source : (Space.com) : https://www.space.com/11090-aliens-attack-visitors-earth-robots.html

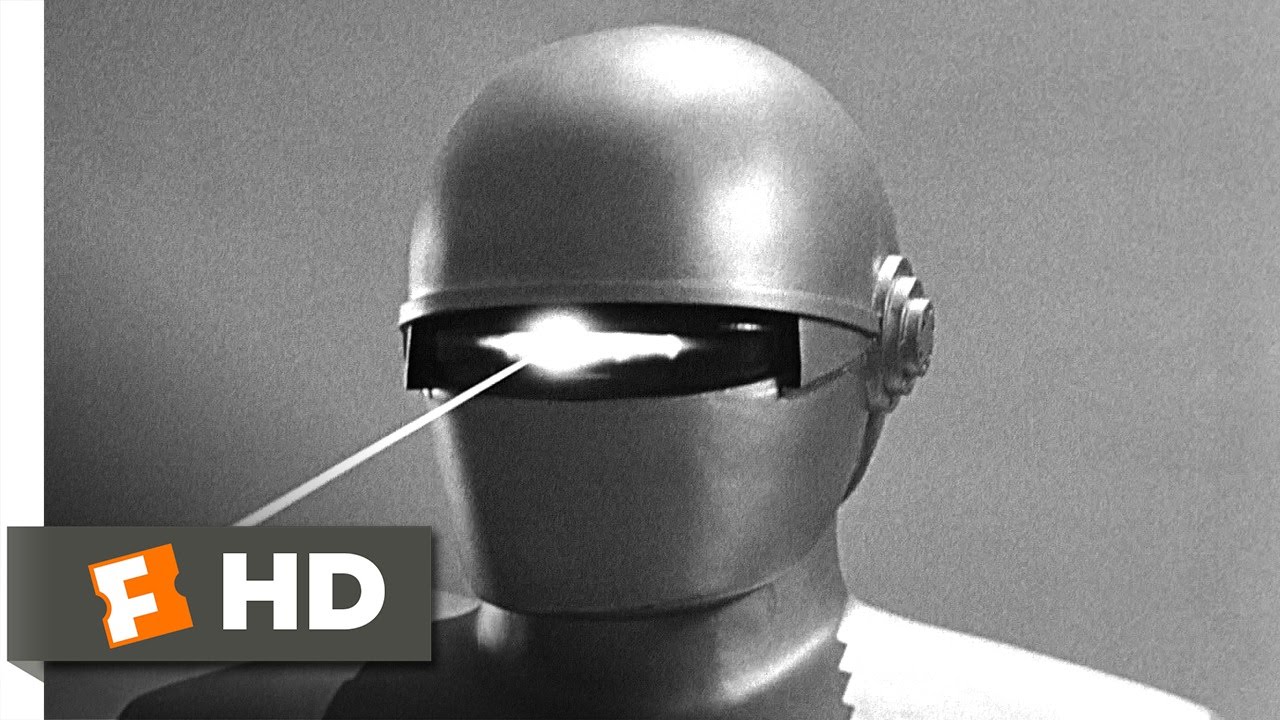

The alien invaders in the new movie "Battle: Los Angeles" are anything but friendly looking. Mechanical components are built right into their stringy bodies, a weapon protrudes from an arm, and machinery bristles along their armored exoskeletons. But like us, the aliens possess slimy internal organs and they can bleed.

This portrayal of extraterrestrials, however, strikes some scientists as unrealistic. Should any real visitors — or conquerors — from space come to our planet, the scientific odds strongly suggest the aliens will be completely artificial forms of life.

"If an extraterrestrial spaceship ever lands on Earth, I bet you that it is 99.9999999 percent likely that what exits that ship will be synthetic in nature," said Michael Dyer, a professor of computer science at the University of California, Los Angeles (appropriately enough).

In civilizations advanced enough to travel between the stars, it is quite likely that machines have supplanted their biological creators, some scientists argue. Automatons — unlike animals — could withstand the hazards to living tissue and the strain on social fabrics posed by a long interstellar voyage.

Furthermore, nonliving beings would not have to worry all that much about the environmental conditions at their destination — if the planet is hot or cold, bacteria-ridden or sterile, has oxygen in the air or is airless, machines would not care.

In short, don't expect cuddly, squishy E.T.s to come calling someday; instead, picture robots descending from the sky.

"I fully agree that anyone likely to visit will not be biological beings attacking us like in 'Battle: Los Angeles,'" said Seth Shostak, senior astronomer at the SETI Institute.

"Anyone coming here would likely be confronting many of the same problems that we as biological life would encounter in space," Shostak said.

How robots replace us (and living aliens)

Dyer has identified four paths that could lead to the substitution of humans or other biologicals by their own robotic creations — and given enough time, he thinks such a fate awaits most life in the cosmos.

"I believe that we will be replaced within a few hundred years by synthetic agents much more intelligent than ourselves," Dyer said, and these hardy heirs will be far better suited for colonizing distant worlds.

The first path for robotic domination, logically enough, is through dependence. An ever-increasing reliance on machines to do work for us will one day reach a critical juncture when these sentient bots decide to cast off the yokes of their air-breathing overseers.

The second slippery slope to robots replacing humans is a new sort of arms race that has echoes of "Terminator," wherein nations construct cybernetic fighting forces that get out of control.

"Each country is forced to build intelligent, autonomous robots for warfare in order to survive against the intelligent, autonomous robots that are being built by other countries that might attack them," Dyer said. Human oversight could be lost because "the enemy side has no control of the robots that are trying to kill them."

From brains to bytes

The machine takeover need not be violent, however, and could represent a transformational leap for humanity based, perhaps, on our desire for immortality.

Scientists have long projected that technology will eventually reach the point where our brain-based consciousnesses can be transferred to synthetic media, and Dyer sees this as the third path to machine supremacy.

Futurist Ray Kurzweil has famously promoted the idea of such a man-machine convergence as the "singularity" and he has foretold 2045 as the year in which it happens.

Continuing leaps forward in artificial intelligence (AI) — brought to popular attention recently by IBM's Watson supercomputer vanquishing its human champions in the quiz show "Jeopardy!" — imply that machines will eventually be able to think for themselves.

Into the void

The fourth way robust mechanical entities ascend is through forays into interstellar space. More than likely, "space travel will simply be too difficult for biological entities," Dyer said.

"Traveling from one star system to another is hard and it's slow," Shostak agreed.

Even zipping along at close to the speed of light (the universal speed limit), voyagers would need on the order of half a decade to reach the nearest star system, Alpha Centauri. The energy requirements for accelerating a spaceship to speeds appropriate for cosmic excursions, however, are daunting, Shostak said. Habitable planets could very well be centuries apart.
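To put rough numbers on the trip, here is a minimal Python sketch of a constant-speed cruise to Alpha Centauri. The 4.37 light-year distance is an assumed approximate value, acceleration and deceleration are ignored, and the energy figure is only the ship's relativistic kinetic energy per kilogram (a real propulsion system would need far more). It shows that the "half a decade" is Earth-frame time at nearly light speed, while the energy cost per kilogram climbs without limit as the speed approaches c.

```python
import math

C = 299_792_458.0             # speed of light, m/s
ALPHA_CEN_DISTANCE_LY = 4.37  # assumed approximate distance to Alpha Centauri, light-years

def trip(distance_ly: float, beta: float) -> tuple[float, float, float]:
    """Constant-speed cruise at beta (fraction of c); acceleration phases ignored.

    Returns (years elapsed on Earth, years elapsed aboard, kinetic energy in J per kg of ship).
    """
    if not 0 < beta < 1:
        raise ValueError("beta must be strictly between 0 and 1")
    gamma = 1.0 / math.sqrt(1.0 - beta**2)  # Lorentz factor
    earth_years = distance_ly / beta        # light-years divided by fraction of c gives years
    ship_years = earth_years / gamma        # crew clocks run slow by a factor of gamma
    joules_per_kg = (gamma - 1.0) * C**2    # relativistic kinetic energy per kilogram
    return earth_years, ship_years, joules_per_kg

for beta in (0.1, 0.5, 0.9, 0.99):
    earth, ship, e_kg = trip(ALPHA_CEN_DISTANCE_LY, beta)
    print(f"{beta:.2f}c: {earth:5.1f} yr (Earth), {ship:5.1f} yr (aboard), {e_kg:.2e} J/kg")
```

Slowing down to a tenth of light speed stretches the trip to decades, which is exactly the trade-off between travel time and energy that the article describes.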

The journey itself poses major challenges to our bodies, fragile bags of water stitched together by proteins that they are. One requisite is shielding to protect a starship's occupants from damaging radiation showers precipitated by the impact of interstellar gas and dust particles against a fast-moving ship's hull. And good luck keeping a society healthy and functioning in a closed environment for many human generations, some of which will never know life outside of the ship.

"Imagine being radiated for thousands of years in a 'tin can' traveling through space, with all urine recycled while trying to rear generations of offspring and still maintaining civilization," Dyer said.

Shostak joked: "Just watch reality TV to see how quickly people want to boot you off the island."

Robots, on the other hand, would have a much easier time of things. "Synthetic bodies can remain dormant and be awakened by a simple timer," Dyer pointed out. "If the new planet is inhospitable to biological entities, it won't matter, because the space travelers will not be biological."

Space radiation also would not be such a problem to machines. Being immortal and all, they might not feel the need to zoom through the cosmos so fast that interstellar particles turn into cancer-causing or circuit-scrambling missiles.
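The point about speed and radiation can be made concrete with the relativistic kinetic energy formula, (γ − 1)mc². The Python sketch below assumes the ship plows through hydrogen nuclei (protons) that are essentially at rest, so in the ship's frame each one arrives at the ship's own speed; the proton rest energy of about 938.272 MeV is the only physical constant used. Dust grains, shielding and secondary particle showers are not modeled.

```python
import math

PROTON_REST_ENERGY_MEV = 938.272  # approximate rest energy of a proton (hydrogen nucleus)

def impact_energy_mev(beta: float) -> float:
    """Kinetic energy, in the ship's frame, of an interstellar proton that was at rest
    before the ship hit it at speed beta (fraction of c)."""
    if not 0 <= beta < 1:
        raise ValueError("beta must be in [0, 1)")
    gamma = 1.0 / math.sqrt(1.0 - beta**2)        # Lorentz factor
    return (gamma - 1.0) * PROTON_REST_ENERGY_MEV  # relativistic kinetic energy

for beta in (0.01, 0.1, 0.5, 0.99):
    print(f"v = {beta:.2f}c: each proton arrives with about {impact_energy_mev(beta):.3g} MeV")
```

Because the energy per particle grows faster than linearly with speed, a patient machine traveler cruising at a few percent of light speed faces far gentler bombardment than a hurried one near c.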

The inevitability of AI

Perhaps the strongest argument for why alien visitors will not be made of meat is the time scales available to extraterrestrial technological development.

The universe is approximately 13.7 billion years old; Earth, about 4.5 billion, and life about 3.8 billion. Intelligent life has been around on our planet for several million years; human civilization emerged just in the last ten thousand years. And it is only in the last century or so that we have devised the means to broadcast into space (radios) and taken baby steps into our own solar system with unmanned probes.

Assuming that our pace of evolutionary and technological development is par for the course, though, civilizations that sprang up around the universe's first Sun-like stars could already have several billion years under their belts.
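The "several billion years" head start follows from the ages quoted above: Earth formed about 13.7 − 4.5 = 9.2 billion years after the Big Bang, so a planet that formed earlier and then developed at exactly Earth's pace would be ahead of us by the difference. In this Python sketch the universe and Earth ages come from the article, while the earlier formation times are purely hypothetical inputs chosen for illustration.

```python
UNIVERSE_AGE_GYR = 13.7  # age of the universe, billions of years (from the article)
EARTH_AGE_GYR = 4.5      # age of Earth, billions of years (from the article)

def head_start_gyr(planet_formed_gyr_after_big_bang: float) -> float:
    """Head start (Gyr) of a civilization whose planet formed at the given cosmic time
    and then followed Earth's timeline exactly (an assumption, not a prediction)."""
    earth_formed_at = UNIVERSE_AGE_GYR - EARTH_AGE_GYR  # about 9.2 Gyr after the Big Bang
    return earth_formed_at - planet_formed_gyr_after_big_bang

for t in (1.0, 2.0, 4.0):  # hypothetical formation times, Gyr after the Big Bang
    print(f"planet formed {t:.0f} Gyr after the Big Bang -> {head_start_gyr(t):.1f} Gyr head start")
```

Even with fairly late hypothetical formation times, the lead is measured in billions of years, compared with roughly a century of radio technology on Earth.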

In seeking greater control over their existence by becoming more intelligent, just as we have, these societies should end up becoming "postbiological," said Steven Dick, a former chief historian at NASA.

"If you have a civilization that’s thousands, millions, or even billions of years older than us, it will have gone through cultural evolution and I think the likelihood is very good that they won't be like us, and will be AIs," Dick said.

Resistance is futile

Regardless of how machines ultimately end up in charge, their expansion into space seems certain — whether to obtain new resources or to explore (or, for less appealing motives, to exterminate all biological life).

Science fiction has multiple illustrative examples, from the all-mechanical beings in "Transformers" to the hybrid biomechanical Borg of "Star Trek," the latter representing a likely transitional phase from the pure biological to the postbiological. The Cylons of "Battlestar Galactica" present an interesting counterexample of machines incorporating living tissues into spacecraft for abilities such as healing, and, oddly enough, thinking.

At any rate, the future is not terribly bright for Homo sapiens, at least in a flesh-and-blood form. "I think the most we can hope for is to embed software into all intelligent synthetic entities to cause them to want to protect the survivability of biological entities, with humans at the top of the list for protection," Dyer said.

But even this scenario brings up dilemmas, Dyer noted.

"I can foresee my robotic master not letting me do any activities that it deems will be harmful to my long-term survival," Dyer said, "so I'm no longer allowed to eat ice cream while lying on the sofa watching junk TV shows."

Or the latest alien-invasion, Hollywood-popcorn flick.
