It is a tough time to be a gamer in need of a graphics card upgrade. There are some excellent options for gaming on the PC, but with the cryptocurrency gold rush that has taken place over the past several months, it can be difficult to (A) find certain graphics cards in stock and (B) avoid paying an inflated price for one. Digital coin miners have created a shortage, and if AMD's Radeon RX Vega 64 proves to be a mining powerhouse as rumored, we could be looking at a similar situation for many months to come.

One of the staff members at OCUK (Overclockers UK) claims the hash rate on "Vega" is 70 to 100 million hashes per second (MH/s). He does not specify which Vega graphics card, but it is a safe assumption that it would be the higher-end Radeon RX Vega 64 that might be capable of approaching 100 MH/s. As he indicates, anything close to that is "insanely good."

"Trying to devise some kind of plan so gamers can get them at MSRP without the miners wiping all the stock out within 5 minutes of product going live," OCUK says.

Therein lies the problem for gamers. Certain cryptocurrencies are best mined with graphics cards. One of them is Ethereum, which has skyrocketed in value over the last several months. At the beginning of the year, it was trading at around $8. It is currently valued at just under $224, and at one point recently it hit the $400 mark.

The good news here is that nothing is yet official, though rumors of Radeon RX Vega 64 being great at mining are coming from multiple corners of the web. Videocardz, for example, claims to have heard from a "good source" that AMD has informed its

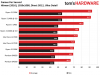

hardware partners of the situation, presumably so they can brace for a shortage. The source also says the hash rate will be lower than 100 MH/s, but still at least double that of the Radeon RX Vega Frontier Edition, which has a hash rate of around 30 MH/s.

To put these numbers into perspective, most of the higher-end cards that digital coin miners flock to have hash rates ranging from 20 MH/s to 30 MH/s. If the Radeon RX Vega 64 is significantly better at mining Ethereum, which is still trading high, then it is a safe bet there will be a shortage no matter how hard AMD tries to keep up.